I’m not a big fan of using IDEs. I spent my first years as an embedded engineer trying to get rid of them, and even though nowadays I use VS Code a lot, I try to use as few plugins as possible. I strongly believe that a terminal is the only thing you really need (more or less). However, most people, due to its popularity, use VS Code for almost everything, so I’ve decided to write this guide for those who, unlike me, try to use it as a one-stop solution.

By this point, I hope it's clear that a Docker container is essentially like having a virtual machine. You need to understand this concept clearly. Also, keep in mind that VS Code is just a code editor—it allows us to write code, and that's it. There is a plugin called Dev Containers that allows you to handle Docker images and containers within VS Code. Go ahead and install it.
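If you would rather stay in the terminal, the extension can also be installed with the VS Code command-line tool. This is a minimal sketch, assuming the `code` command is on your PATH; the function and variable names are only for illustration.

```shell
# Marketplace identifier of the Dev Containers extension
DEV_CONTAINERS_EXT="ms-vscode-remote.remote-containers"

# Install the extension if the VS Code CLI is available (illustrative helper)
install_dev_containers() {
    if command -v code >/dev/null 2>&1; then
        code --install-extension "$DEV_CONTAINERS_EXT"
    else
        echo "VS Code CLI ('code') not found on PATH" >&2
        return 1
    fi
}
```

Call `install_dev_containers` once, then restart VS Code if it was already open.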

As always, let's start with a simple example to warm up. Clone our template project and open VS Code in the folder we just cloned:

$ git clone https://modularmx-admin@bitbucket.org/modularmx/template-g0.git project

$ code -r project

We need to create a configuration file called .devcontainer.json, which can be placed at the root of the project or inside a folder named .devcontainer (in that case it is named devcontainer.json, without the leading dot). It's up to you. I'll place it in the root folder of my project.

$ code .devcontainer.json

In this file, we need to specify either the image we want to use or the Dockerfile we use to build the image. Yes, Dev Containers allows us to build our image, similar to Docker Compose. Let's go with the second option.

{
    "name": "test",             // Name of the dev container
    "dockerFile": "dockerfile"  // Name of the Dockerfile used to build the image
}

We can use the following Dockerfile for this example. Typically, the Dockerfile is placed in the same directory as the .devcontainer.json file. However, if you choose a different directory, make sure to include the relative path in the Dockerfile name. For example, "dockerFile": "path/to/file/dockerfile-name".

# Fetch a new image from archlinux

FROM ubuntu

#install build tools from our stm32g0 microcontroller and openocd to flash our device

RUN apt-get update && apt-get -y install make openocd wget xz-utils

# libtinfo5 and libncurses5 are needed to run our arm toolchain, download from the archive repo and install

RUN wget http://archive.ubuntu.com/ubuntu/pool/universe/n/ncurses/libtinfo5_6.2-0ubuntu2.1_amd64.deb && \

wget http://archive.ubuntu.com/ubuntu/pool/universe/n/ncurses/libncurses5_6.2-0ubuntu2.1_amd64.deb && \

wget http://archive.ubuntu.com/ubuntu/pool/universe/n/ncurses/libncursesw5_6.2-0ubuntu2.1_amd64.deb && \

dpkg -i ./libtinfo5_6.2-0ubuntu2.1_amd64.deb && dpkg -i ./libncurses5_6.2-0ubuntu2.1_amd64.deb && dpkg -i ./libncursesw5_62-0ubuntu2.1_amd64.deb && \

rm libtinfo5_6.2-0ubuntu2.1_amd64.deb libncursesw5_6.2-0ubuntu2.1_amd64.deb libncurses5_6.4-2_amd64.deb

# Download the ARM GNU toolchain using wget, decompress, and set its route to PATH variable

RUN wget https://developer.arm.com/-/media/Files/downloads/gnu/13.3.rel1/binrel/arm-gnu-toolchain-13.3.rel1-x86_64-arm-none-eabi.tar.xz && \

tar -xvf arm-gnu-toolchain-13.3.rel1-x86_64-arm-none-eabi.tar.xz -C /home/ubuntu && \

rm arm-gnu-toolchain-13.3.rel1-x86_64-arm-none-eabi.tar.xz && \

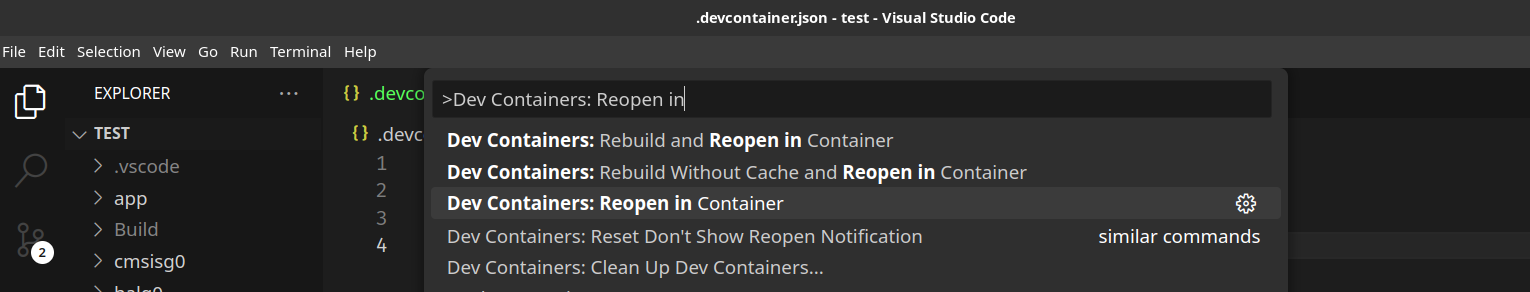

echo 'export PATH=/home/ubuntu/arm-gnu-toolchain-13.3.rel1-x86_64-arm-none-eabi/bin:$PATH' >> ~/.bashrcIn VS Code, press Ctrl + Shift + p, then type Dev Containers: Reopen in Container and hit Enter. Your VS Code will reload.

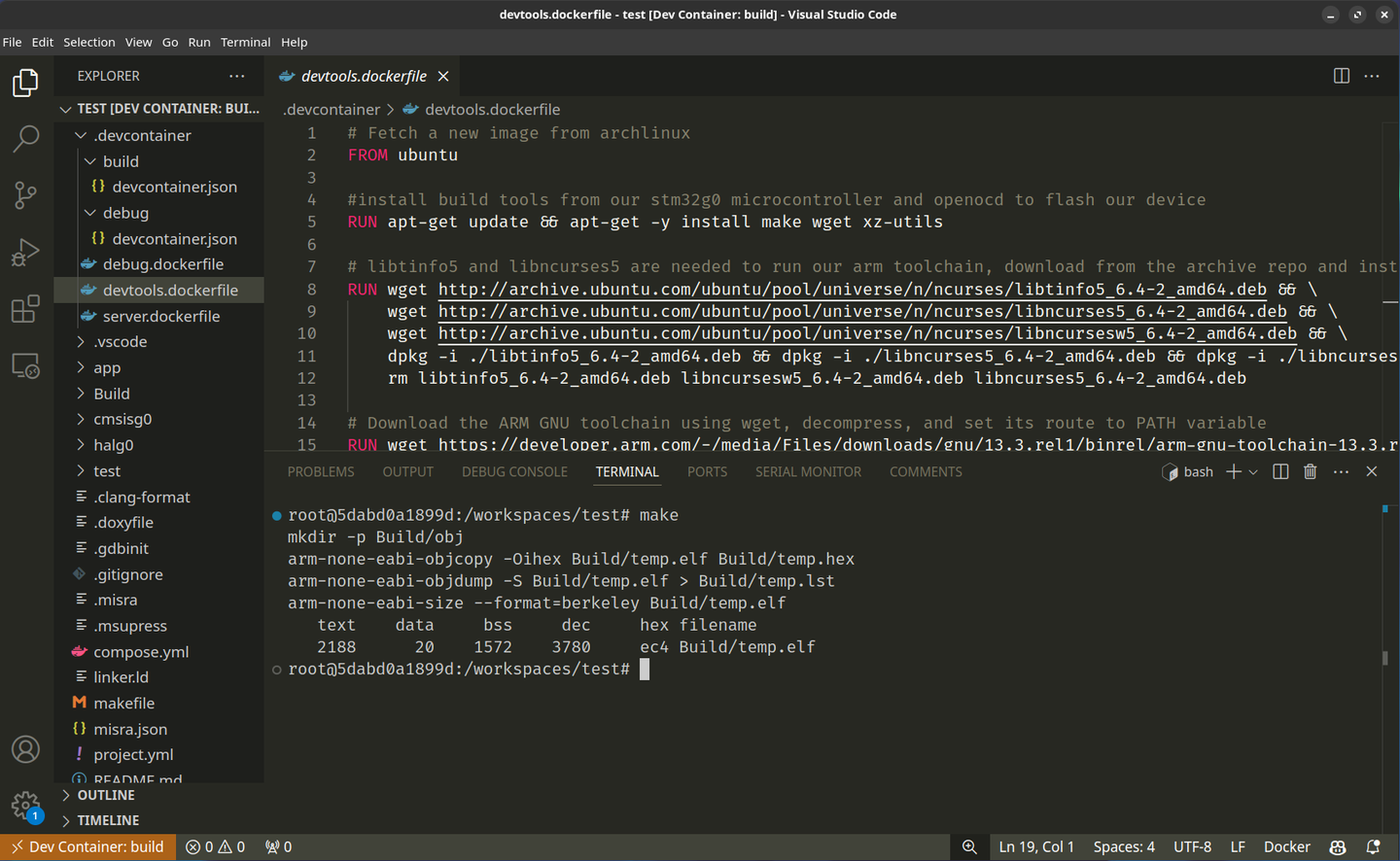

Then, it will reopen, but first, it will build the image and run the container. If you open the VS Code terminal, you'll notice that it opens inside the container, with your project’s root directory shared with the container. You can use the ls command to verify this, and if you'd like, you can run make as well.

[root@b6594a46e2f2 project]# ls

/workspaces/project

"I'm not impressed; we already did that in the previous steps, so I don't see the point of this new plugin." You're right, but keep in mind that this plugin is not meant to replace tools like Docker or Docker Compose. It's designed to enhance the developer experience. Here's an example:

You may have noticed that when we reopened VS Code, it was complaining about Git. We’re using a Git repository, but Git isn’t installed in our container. Let’s fix this by modifying the .devcontainer.json file.

{
    // Name of the dev container
    "name": "test",
    // Dockerfile to use to build the image
    "dockerFile": "dockerfile",
    // List of features to install from the official repository
    "features": {
        "ghcr.io/devcontainers/features/git:1": {}
    }
}

Rebuild your dev container (VS Code will show a pop-up window suggesting the option, or you can press Ctrl + Shift + P and search for Dev Containers: Rebuild Container). Then, try again.

[root@b6594a46e2f2 project]# git --version

git version 2.43.0

"Hmm, we could do that in our image." Yes, we could, but here's the scenario: maybe you don't have control over the image you're using and can't modify the original Dockerfile. We installed Git using the official features repository, but in case you need to install something that isn't available there, we can use the postCreateCommand property, as shown in the example below. Here, we install Doxygen just for fun.

{
    // Name of the dev container
    "name": "test",
    // Dockerfile to use to build the image
    "dockerFile": "dockerfile",
    // List of features to install from the official repository
    "features": {
        "ghcr.io/devcontainers/features/git:1": {}
    },
    // Execute commands after the container is created
    "postCreateCommand": "apt-get update && apt-get install -y doxygen"
}

Dev Containers is actually a specification created by Microsoft but released as open source, so other code editors can implement similar functionality. Here is the official documentation in case you want to see all of its options: https://containers.dev/
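Before moving on, it can be handy to confirm from the container terminal that everything installed so far is actually reachable. The helper below is only a sketch; `check_tools` is a made-up name, and the tool list in the usage note is an assumption based on the Dockerfile and configuration above.

```shell
# Report whether each given tool is on PATH.
# Returns non-zero if at least one of them is missing.
check_tools() {
    local missing=0 tool
    for tool in "$@"; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "ok:      $tool"
        else
            echo "missing: $tool"
            missing=1
        fi
    done
    return "$missing"
}
```

Inside the container you could run `check_tools make git doxygen openocd arm-none-eabi-gcc`. Keep in mind that the toolchain path is added through ~/.bashrc, so it is only visible from an interactive bash session such as the VS Code terminal.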

Debugging

Well, it looks good so far, but what about debugging? VS Code has a really nice plugin that works as a graphical front end for GDB. If you remember, to connect an instance of OpenOCD running in a container to our board, we need to run the container with some options like this:

$ docker run -it --rm --device=/dev/bus/usb:/dev/bus/usb openocd

We can do the same with Dev Containers, but we're not going that route. Why? Because we can use Docker Compose, which is more universal. Besides, imagine you're in a team where not everyone is using VS Code, and the Compose file is already there.
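For reference, the Dev Containers route would probably look something like the sketch below, which relies on the runArgs property to hand extra arguments to docker run. It writes the example into a scratch folder (usb-demo, a hypothetical name) so your real configuration is not overwritten; only the runArgs line would need to be copied into it.

```shell
# Sketch only: write an example config into a scratch directory
mkdir -p usb-demo
cat > usb-demo/.devcontainer.json <<'EOF'
{
    // Name of the dev container
    "name": "test",
    // Dockerfile to use to build the image
    "dockerFile": "dockerfile",
    // Extra arguments for docker run: share the USB bus with the container
    "runArgs": ["--device=/dev/bus/usb:/dev/bus/usb"]
}
EOF
```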

Now, let's write the following Compose file (compose.yml) to run our previous container, passing the USB bus through to it:

services:
  devtools: # service name, referenced later from the dev container configuration
    build:
      context: . # directory where the Dockerfile is located
      dockerfile: dockerfile # Dockerfile name used to build the image
    devices: # device mapping to usb ports
      - /dev/bus/usb:/dev/bus/usb
    volumes: # share current directory . with the container /workspaces directory
      - type: bind
        source: ./
        target: /workspaces
    command: # keep the container running
      sleep infinity

We're not going to run Compose manually. Instead, let's use our Dev Container file. Compose will still build the image from the Dockerfile we created.
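For teammates who do not use VS Code, running the same Compose file by hand could look roughly like this. The wrapper names are invented for illustration, and the sketch assumes Docker Compose v2 (the `docker compose` subcommand) and that you are in the directory containing compose.yml.

```shell
# Thin wrappers around Docker Compose for working outside VS Code.
# Build the image if needed and start the service in the background
dev_up()    { docker compose up -d --build; }
# Open an interactive shell in the running devtools container
dev_shell() { docker compose exec devtools bash; }
# Stop and remove the container
dev_down()  { docker compose down; }
```

A typical session would be `dev_up`, then `dev_shell` to build the project with make, and `dev_down` when finished.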

Also, we need to install the Cortex-Debug plugin, but inside our container! How can we do that? It's easy. There is a JSON property we can use in our devcontainer.json file called customizations. Here, we can specify extensions and settings for VS Code that will only exist when running inside the container. You can even set specific themes!

{
    // Name of the dev container
    "name": "test",
    // Compose file used to build and run the container
    "dockerComposeFile": "compose.yml",
    // Service (container) that VS Code attaches to
    "service": "devtools",
    // Working directory inside the container
    "workspaceFolder": "/workspaces/${localWorkspaceFolderBasename}",
    // List of features to install from the official repository
    "features": {
        "ghcr.io/devcontainers/features/git:1": {}
    },
    // Execute commands after the container is created
    "postCreateCommand": "apt-get update && apt-get install -y doxygen",
    // Some useful VS Code configuration that only exists in our container
    "customizations": {
        "vscode": {
            // Add some extensions
            "extensions": [
                "mcu-debug.debug-tracker-vscode",
                "ms-vscode.cpptools",
                "marus25.cortex-debug"
            ],
            // Set some settings
            "settings": {
                "workbench.colorTheme": "Monokai"
            }
        }
    }
}

Now, let's create the configuration file for our debugging session, launch.json. It should look something like this. You can refer to what we wrote in the previous post for more details.

{

"version": "1.12.1",

"configurations": [

{

"type": "cortex-debug",

"request": "launch",

"name": "Debug (OpenOCD)",

"servertype": "openocd",

"interface": "swd",

"cwd": "${workspaceRoot}",

"runToEntryPoint": "main",

"executable": "${workspaceRoot}/Build/temp.elf",

"device": "STM32G0B1RE",

"configFiles": [

"board/st_nucleo_g0.cfg"

],

"svdFile": "${workspaceRoot}/STM32G0B1.svd",

"serverpath": "/usr/bin/openocd",

"showDevDebugOutput": "raw"

}

]

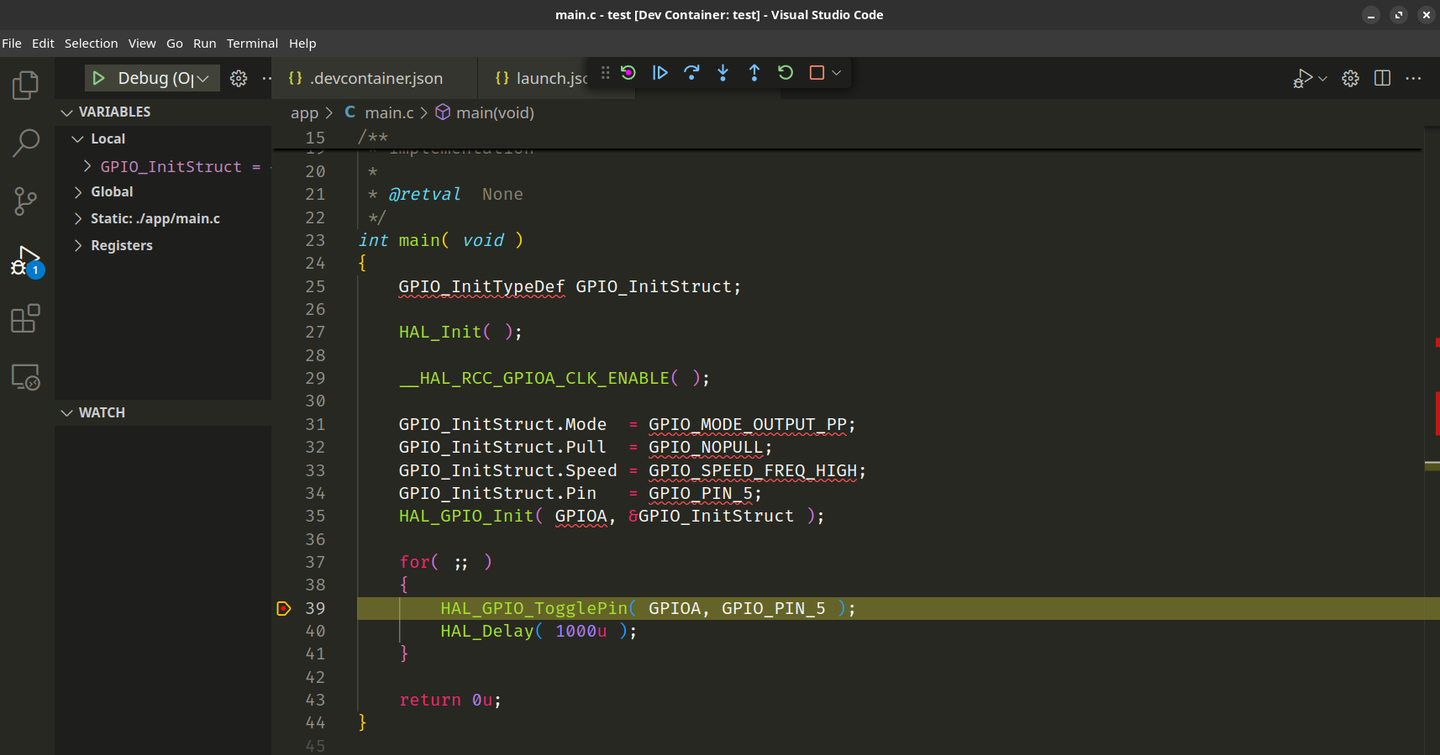

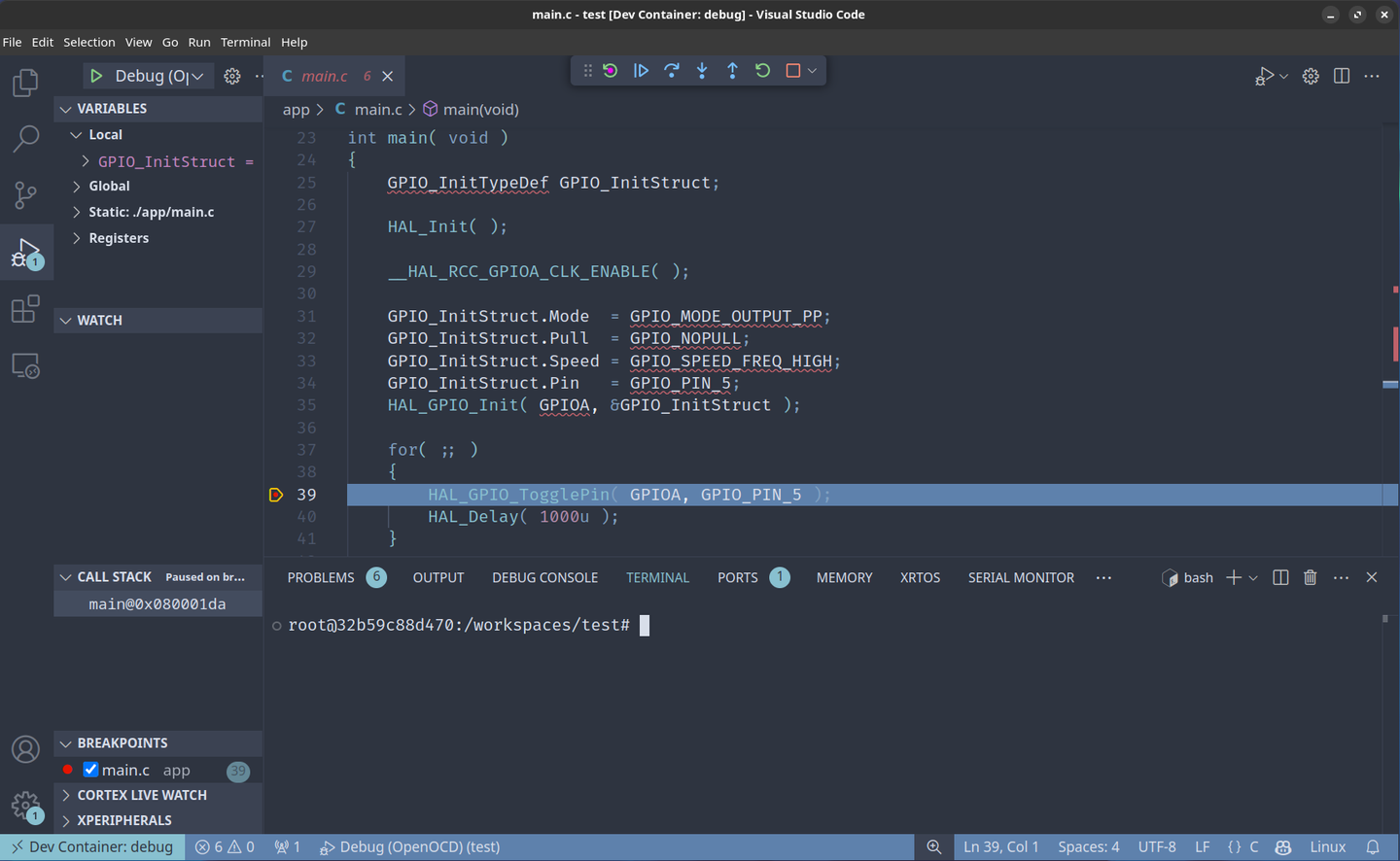

}Launch the cortex debug as usual and et voila!!!. Btw here is anice video for you https://youtu.be/b1RavPr_878?si=2INVTdmBpoes5JU7

Using multiple containers

"Hey Diego, I remember you mentioned that we should use multiple containers and not put all the tools we need into a single container." Hmm, yes, but it will depend on your needs, of course. Let's move OpenOCD to a separate container. Also, let's create a new directory to store our development container configuration and the Dockerfiles we will need. This new directory should be called .devcontainer:

$ mkdir .devcontainer

$ mv .devcontainer.json .devcontainer/devcontainer.json

$ mv dockerfile .devcontainer/devtools.dockerfile

$ code .devcontainer/server.dockerfile

In our new server.dockerfile, we will include the instructions to install OpenOCD (by this time, you should know what to write). Don't forget to remove OpenOCD from the previous Dockerfile, now called devtools.dockerfile.

# Fetch a new image from alpine
FROM alpine

# Install openocd to flash and debug our device
RUN apk add --no-cache openocd

# Run openocd as soon as the container starts and bind it to the container's
# own IP address, using its service name
ENTRYPOINT [ "openocd", "-f", "board/st_nucleo_g0.cfg", "-c", "bindto open_server" ]

Now, in the compose.yml file, let's separate the OpenOCD service, publish the port, provide a custom network, and assign a static IP. This is necessary because our Cortex-Debug plugin will need this information; otherwise, it won't know how to connect to the OpenOCD container.

services:
  open_server: # service name, also the host name OpenOCD binds to
    build:
      context: . # directory used as the build context
      dockerfile: .devcontainer/server.dockerfile # Dockerfile for openocd
    ports: # port mapping
      - 3333:3333
    devices: # device mapping to usb ports
      - /dev/bus/usb:/dev/bus/usb
    networks:
      static_net: # use network static_net
        ipv4_address: 172.25.0.3 # assign ip address to container
  devtools: # service used to build and debug the project
    build:
      context: . # directory used as the build context
      dockerfile: .devcontainer/devtools.dockerfile # Dockerfile used to build the image
    volumes: # share current directory . with the container /workspaces directory
      - type: bind
        source: ./
        target: /workspaces
    depends_on:
      - open_server
    networks:
      static_net: # use network static_net
        ipv4_address: 172.25.0.4 # assign ip address to container
    command: # keep the container running
      sleep infinity
networks:
  static_net: # network name to be used by containers
    driver: bridge # network driver
    ipam:
      config: # network configuration
        - subnet: 172.25.0.0/16 # network subnet
          gateway: 172.25.0.1 # network gateway

There is nothing to modify in the .devcontainer.json file, except for changing its name to devcontainer.json (without the initial period, since it is now inside the .devcontainer folder) and indicating that our Compose file is now one directory up.

// Compose file, now located one directory up
"dockerComposeFile": "../compose.yml",

However, we do need to make changes to our launch.json file. Since OpenOCD is now running in a different container (which essentially means it's running on a different machine), you will need to change the server type to external and add the OpenOCD container's IP address with "gdbTarget": "172.25.0.3:3333". You can also remove any lines referencing OpenOCD.

{
    "version": "0.2.0",
    "configurations": [
        {
            "type": "cortex-debug",
            "request": "launch",
            "name": "Debug (OpenOCD)",
            "servertype": "external",
            "gdbTarget": "172.25.0.3:3333",
            "interface": "swd",
            "cwd": "${workspaceRoot}",
            "runToEntryPoint": "main",
            "executable": "${workspaceRoot}/Build/temp.elf",
            "device": "STM32G0B1RE",
            "svdFile": "${workspaceRoot}/STM32G0B1.svd"
        }
    ]
}

Launch your dev container, and once you're inside, start your debugger. In this example, only the devtools container is running within VS Code. The OpenOCD container is also running, but in the background, and there is no need for the editor to interact with it. By the way, your project root directory should look like this:

.
├── app
├── Build
...
├── compose.yml
├── .devcontainer
│   ├── devcontainer.json
│   ├── devtools.dockerfile
│   └── server.dockerfile
└── .vscode
    └── launch.json

Another nice video about dev containers and Compose: https://youtu.be/p9L7YFqHGk4?si=hS2iYdUTL3wTkitk
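If the debugger refuses to connect, one thing worth checking from a terminal inside the devtools container is whether the OpenOCD GDB port is reachable at all. The function below is a rough sketch; its name is invented, and it assumes bash (for the /dev/tcp redirection) and the coreutils `timeout` command are available.

```shell
#!/usr/bin/env bash
# Return 0 if a TCP connection to host:port can be opened within 2 seconds.
gdb_port_open() {
    local host="$1" port="$2"
    timeout 2 bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Address and port taken from compose.yml and launch.json
if gdb_port_open 172.25.0.3 3333; then
    echo "OpenOCD GDB server is reachable"
else
    echo "Cannot reach 172.25.0.3:3333; is the open_server container running?"
fi
```

A failure here usually points at the OpenOCD container (board not connected, container stopped) rather than at the Cortex-Debug configuration.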

Using multiple dev containers

In the previous example, we used two containers, but only one of them utilized the VS Code dev container plugin. Now, what happens if we want to synchronize two dev containers with VS Code? In this scenario, we will separate our debugger from the devtools container, resulting in three different containers: one for the debugger server, one for building the project, and the third for debugging purposes. Two of these containers will need to work with VS Code, as we want to continue using the Cortex-Debug plugin as the visual front end.

Let's write a third image that contains only the GDB debugger. Name this new Dockerfile debug.dockerfile and place it in the .devcontainer folder with the following content:

# Fetch a new image from archlinux
FROM archlinux:base

# Install only the GDB debugger for our stm32g0 microcontroller
RUN pacman -Sy --noconfirm arm-none-eabi-gdb

The Compose configuration file now has all three services:

services:
  open_server: # service name, also the host name OpenOCD binds to
    build:
      context: . # directory used as the build context
      dockerfile: .devcontainer/server.dockerfile # Dockerfile used to build the image
    ports: # port mapping
      - 3333:3333
    devices: # device mapping to usb ports
      - /dev/bus/usb:/dev/bus/usb
    networks:
      static_net: # use network static_net
        ipv4_address: 172.25.0.3 # assign ip address to container
  devtools: # service used to build the project
    build:
      context: . # directory used as the build context
      dockerfile: .devcontainer/devtools.dockerfile # Dockerfile used to build the image
    volumes: # share current directory . with the container /workspaces directory
      - type: bind
        source: ./
        target: /workspaces
    depends_on:
      - open_server
    networks:
      static_net: # use network static_net
        ipv4_address: 172.25.0.4 # assign ip address to container
    command: # keep the container running
      sleep infinity
  debug: # service that runs gdb
    build:
      context: . # directory used as the build context
      dockerfile: .devcontainer/debug.dockerfile # Dockerfile used to build the image
    volumes: # share current directory . with the container /workspaces directory
      - type: bind
        source: ./
        target: /workspaces
    depends_on: # start only after open_server has been started
      - open_server
    networks:
      static_net: # use network static_net
        ipv4_address: 172.25.0.5 # assign ip address to container
    command: # keep the container running
      sleep infinity
networks:
  static_net: # network name to be used by containers
    driver: bridge # network driver
    ipam:
      config: # network configuration
        - subnet: 172.25.0.0/16 # network subnet
          gateway: 172.25.0.1 # network gateway

The trick will be to have two devcontainer configuration files. While it's not possible to give them different names, we can place each file in a separate subfolder with different names, like this:

.
├── app
├── Build
...
├── compose.yml
├── .devcontainer
│   ├── build
│   │   └── devcontainer.json
│   ├── debug
│   │   └── devcontainer.json
│   ├── debug.dockerfile
│   ├── devtools.dockerfile
│   └── server.dockerfile
└── .vscode
    └── launch.json

The devcontainer file in the build folder does not need the plugins to run Cortex-Debug, and it would look like this. It's also important to assign the proper service from the compose.yml configuration file.

{
    // Name of the dev container
    "name": "build",
    // Compose file, located two directories up
    "dockerComposeFile": "../../compose.yml",
    // Service to use from the Compose file
    "service": "devtools",
    // Keep the containers running when the VS Code window is closed
    "shutdownAction": "none",
    // Working directory inside the container
    "workspaceFolder": "/workspaces/${localWorkspaceFolderBasename}",
    // List of features to install from the official repository
    "features": {
        "ghcr.io/devcontainers/features/git:1": {}
    },
    // Some useful VS Code configuration that only exists in our container
    "customizations": {
        "vscode": {
            // Add some extensions
            "extensions": [
                "ms-vscode.cpptools"
            ],
            // Set some settings
            "settings": {
                "workbench.colorTheme": "Monokai"
            }
        }
    }
}

The second devcontainer file needs the Cortex-Debug plugin, but we don't need Git. It's important to note the service each container will use. Additionally, the compose.yml file is now located two directories above.

{

// Name of the dev container

"name": "debug",

// Dockerfile to use to build the image

"dockerComposeFile": "../../compose.yml",

// Service to use in the docker-compose file

"service": "debug",

// keep conainer runnig in case you close VSCode (I'm not sure about this)

"shutdownAction": "none",

// indicate the working directory inside the container

"workspaceFolder": "/workspaces/${localWorkspaceFolderBasename}",

// set some usefull vs code configuraation only exsiting in our contianer

"customizations": {

"vscode": {

// Add some extensions

"extensions": [

"mcu-debug.debug-tracker-vscode",

"ms-vscode.cpptools",

"marus25.cortex-debug"

],

// Set some settings

"settings": {

"workbench.colorTheme": "Nord"

}

}

}

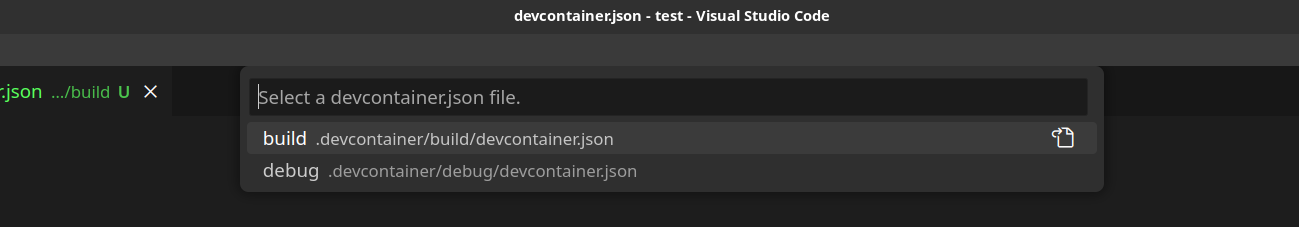

}Now, here's the tricky part: if you use Dev Containers: Reopen in Container as usual, you will see a second menu with two options corresponding to the two devcontainer files we created.

Go for the build option first and run make in case you haven't. Notice in the bottom-left corner that we are now in our build container.

To run the second container, press Ctrl + Shift + P, then search for Dev Containers: Switch Container. Select the debug configuration and wait, then launch the debugger. You’ll notice that I’ve chosen a different theme to distinguish between containers. You can switch between containers without any worries at all.

That's not all: there's a way to use two VS Code instances instead of just one. I would explain this, but there's a nice lady who has already done so: https://youtu.be/bVmczgfeR5Y?si=DqSOQLayCa0wOeOM

Just want to clarify one thing: we’ve been building our images constantly, but this is only for demonstration and experimentation purposes. In reality, someone from the team, an expert in the "black magic" of DevOps (or EmbedOps), will be in charge of setting up the official images for our projects and distributing them to the rest of the developers, typically through a Docker registry service like Docker Hub. In other words, you won’t be building the images using Compose or the dev container plugin.
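In that situation the dev container file would most likely point at the prebuilt image through the image property instead of a Dockerfile. Here is a minimal sketch; the registry path and tag are hypothetical placeholders, and the file is written to a scratch folder (image-demo, also a made-up name) so nothing in the project is overwritten.

```shell
# Sketch only: the registry path and tag below are hypothetical
mkdir -p image-demo
cat > image-demo/.devcontainer.json <<'EOF'
{
    // Name of the dev container
    "name": "test",
    // Prebuilt image pulled from a registry instead of a local Dockerfile
    "image": "registry.example.com/team/stm32-devtools:1.0"
}
EOF
```

With a file like this, Reopen in Container only has to pull the image, so every developer ends up with the same toolchain without building anything locally.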