There is no modern software development without continuous integration, and Docker plays a key role in this process. I'm not going to explain what a pipeline is or how to set one up in Bitbucket (well, maybe just a little). I also assume you have experience using Git, including the most common commands like commit and push. Additionally, you should have a basic understanding of what continuous integration is.

At Modular MX, we rely heavily on Atlassian tools, and when used together, they create a very powerful workflow. This is why we'll be using Bitbucket for this tutorial. However, if you follow the concepts, you should be able to apply them to GitHub, GitLab, or similar platforms. To get started, create a Bitbucket account; it's free for what we'll be doing.

Warming up with pipelines

If you've never set up a pipeline in Bitbucket before, this first part will serve as a simple introductory tutorial. You should also refer to the official documentation for further details.

Start by creating a project and then a repository in Bitbucket (do not add a README.md or .gitignore file). Once your repository is created, set up SSH access. If you have no clue about SSH, here's a quick guide to help you with that:

On your local machine, create a Git repository and add a simple main.c file (just something basic to start with).

```c
#include <stdio.h>

int main( void )
{
    printf( "Hello, World!\n" );
    return 0;
}
```

Also add its corresponding Makefile, shown below. After writing both files, git add, commit, and push, and leave things like that for the moment.

```makefile
all:
	gcc main.c -o main -Wall
```

In Bitbucket, you need to set up a runner. This is essentially a small program that connects your Bitbucket repository to your local machine, so that pipeline steps are executed on your computer. Choose a Linux Docker runner. Here's a guide to help you set up your runner:

The last step will provide you with a command to run a Docker container that includes everything necessary to connect your local machine with your Bitbucket repository. Copy the command it gives you and save it somewhere safe.

Run the container command Bitbucket gave you. Here is mine (use your own; this one is not going to work for you):

```shell
docker container run -it \
  -v /tmp:/tmp \
  -v /var/run/docker.sock:/var/run/docker.sock \
  -v /var/lib/docker/containers:/var/lib/docker/containers:ro \
  -e ACCOUNT_UUID={bda5c83f-563b-4d5e-8431-4fda2b4f4da0} \
  -e REPOSITORY_UUID={85642ca4-2d1b-4cc4-a5e0-4b305f6e9c30} \
  -e RUNNER_UUID={2ff898c1-2219-5d0c-8892-5894c1891da4} \
  -e RUNTIME_PREREQUISITES_ENABLED=true \
  -e OAUTH_CLIENT_ID=kUhyjjqd6D61DHowftS4jKnWxznsNbbb \
  -e OAUTH_CLIENT_SECRET=ATOA69EQldpNg11UHCDMKABUd4jhbXuvcdg1wqfKlvqhJ9_bS9hju4LtaMXgLmlpwz7XCA9F78FE \
  -e WORKING_DIRECTORY=/tmp \
  --name runner-2ff898c1-2219-5d0c-8892-5894c1891da4 \
  docker-public.packages.atlassian.com/sox/atlassian/bitbucket-pipelines-runner
```

If you run docker ps, you can see it's just a container running like any other. We are not going to interact with it, so just leave it there doing its own thing. You can also run it in the background by replacing the -it flag with -d.

```
$ docker ps
CONTAINER ID   IMAGE                                                                           COMMAND                  CREATED             STATUS             PORTS     NAMES
c7eb46721775   docker-public.packages.atlassian.com/sox/atlassian/bitbucket-pipelines-runner   "/bin/sh -c ./entryp…"   About an hour ago   Up About an hour             runner-2ff898c1-2219-5d0c-8892-5894c1891da4
```

Create a file called bitbucket-pipelines.yml with a single step that runs a simple echo. Something important: list all the labels you set for your runner under runs-on. In case you aren't familiar with YAML, here is a small tutorial from Dojo Five.

pipelines:

default:

- step:

name : Echo

runs-on:

- self.hosted

- linux

script:

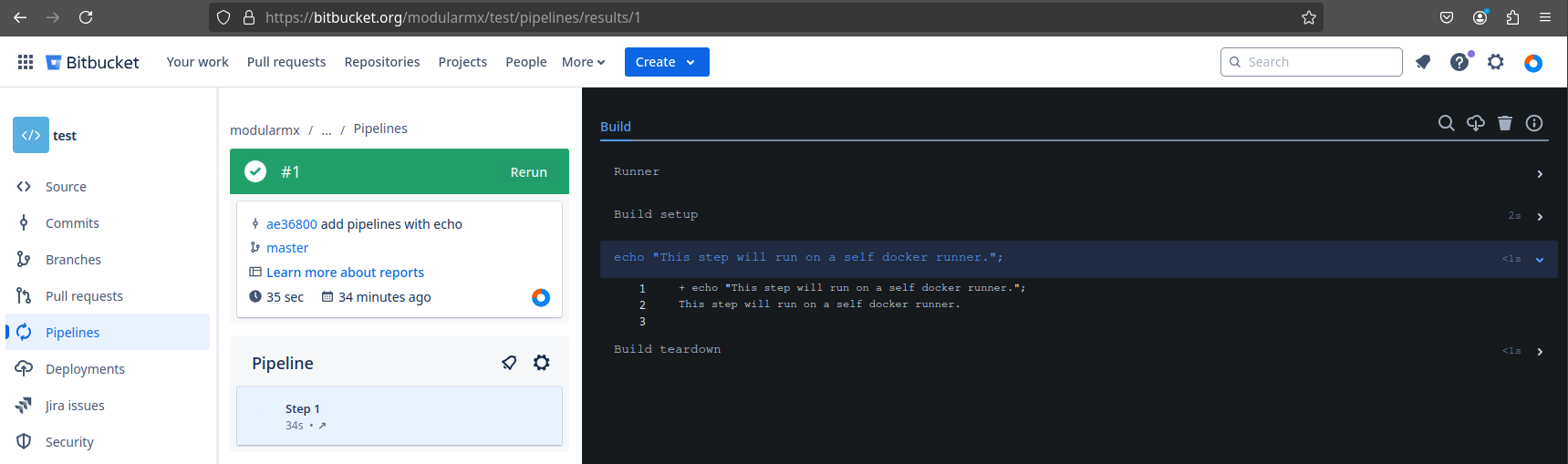

- echo "This step will run on a self docker runner.";Git add, commit, and push. Then, take a look at your pipelines in your Bitbucket repo. They should run successfully with no errors. Of course, it's just a simple echo with no interaction with our code, but we can see that the pipelines are running fine.

This was a simple warm-up, just to refresh your memory on how to set up a pipeline in Bitbucket. What we really want to do now is test whether our project builds correctly, passes unit tests, and so on. For example, if we want to add a second step specifically to build the project, we can update the bitbucket-pipelines.yml file as follows.

Go ahead and add, commit, and push the changes to test the pipeline. (Note: You need to have GCC and Make installed on your local machine.)

```yaml
pipelines:
  default:
    - step:
        name: Echo
        runs-on:
          - self.hosted
          - linux
        script:
          - echo "This step will run on a self-hosted Docker runner."
    - step:
        name: Build
        runs-on:
          - self.hosted
          - linux
        script:
          - make
```

It is important to note that the pipelines we set up are running on our own local machine: the make command is called from our computer through the runner. It does not run on a Bitbucket server or anywhere else.

Using our own images

The last pipeline, which ran Make locally, was fine, but it's not what we want. We want to build the project using a toolchain inside a container. For the following steps, first remove the previous main.c and Makefile (commit this change, but do not push it to the remote repo). Then fetch our template repository and rebase onto it (make sure to resolve all conflicts). Don't push anything yet.

```shell
$ git remote add template git@bitbucket.org:modularmx/template-g0.git
$ git fetch template
$ git rebase template/master
```

Next, prepare a new image for our cross-compiler, arm-none-eabi-gcc, based on Alpine. If you don't want to version the Dockerfile, you can place it somewhere outside the repo, or keep it in the repo and list it in .gitignore. Which option is best is up to you.

```dockerfile
# Start from the Alpine 3.20 base image
FROM alpine:3.20

# Install Make, the Arm cross-compiler and newlib (C library for bare-metal targets)
RUN apk add --no-cache make=4.4.1-r2 gcc-arm-none-eabi=14.1.0-r0 newlib-arm-none-eabi=4.4.0.20231231-r0
```

Upload this image to Docker Hub or any other registry where you have an account, but it needs to be public (for now). I'm going to name my new image arm-none-eabi-gcc with the tag 14. Log in with docker login before pushing.

```shell
$ docker build -t <username>/arm-none-eabi-gcc:14 .
$ docker push <username>/arm-none-eabi-gcc:14
```

Our pipeline will remain mostly the same, but with one very important new feature: a first line with the name of our image, indicating that we want to use it in every step of the pipeline.

image: <username>/arm-none-eabi-gcc:14

pipelines:

default:

- step:

name: Echo

runs-on:

- self.hosted

- linux

script:

- echo "This step will run on a self docker runner.";

- step:

name: Build

runs-on:

- self.hosted

- linux

script:

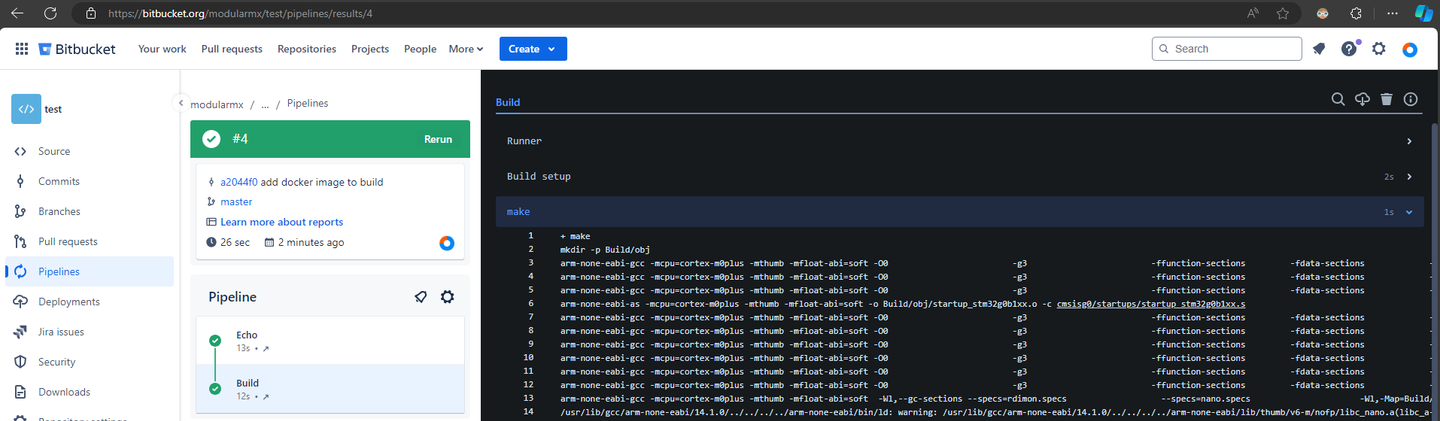

- makedocker start <name of your container>Git again, and this time push all your changes with the -f flag (because we rebased): git push origin master -f. See how our program builds flawlessly, and guess what? You don’t have anything installed on your local machine!

Multiple images

In the previous example, we set one Docker image for all the required steps, but we can also use different images for different steps. Let's create an example for a unit test using Ceedling. First, add two files called dummy.h and dummy.c with a simple add function. Here is dummy.h:

```c
#ifndef __DUMMY_H__
#define __DUMMY_H__

#include <stdint.h>   /* needed for uint32_t */

uint32_t sum( uint32_t a, uint32_t b );

#endif // __DUMMY_H__
```

And dummy.c:

```c
#include <stdint.h>
#include "dummy.h"

uint32_t sum( uint32_t a, uint32_t b )
{
    return a + b;
}
```

Lucky for you, our template already comes prepared to use Ceedling. You only need to locate the file test_dummy.c and fill it with the following test case:

```c
#include "unity.h"
#include "dummy.h"

void setUp( void )
{
}

void tearDown( void )
{
}

void test__sum__two_integers( void )
{
    uint32_t res = sum( 2, 3 );
    TEST_ASSERT_EQUAL_MESSAGE( 5, res, "2 + 3 = 5" );
}
```

In our pipeline file, we add an image line to every step to indicate the image we want to use for that particular step. In the Build step, we use our own image to build the project. In the Test step, we use a publicly available Ceedling image, feabhas/ceedling, which is also hosted on Docker Hub. This is a great example of reusing something someone else has already done.

pipelines:

default:

- step:

name: Build

image: account-name/arm-none-eabi-gcc:12

runs-on:

- self.hosted

- linux

script:

- make

- step:

name: Test

image: feabhas/ceedling

runs-on:

- self.hosted

- linux

script:

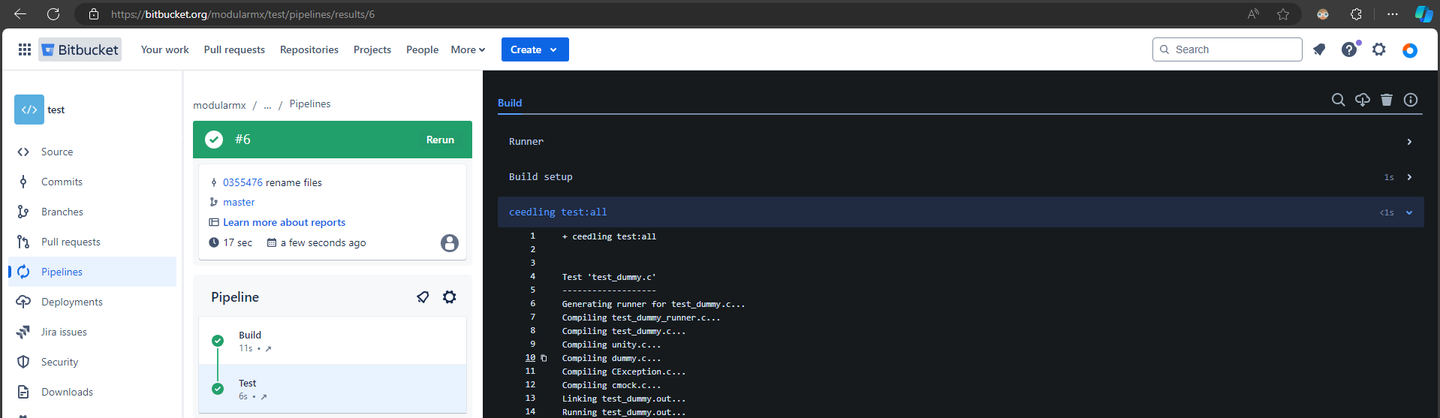

- ceedling test:allYou know what to do, Git everything and push to see how our pipeline is running and execute both steps correctly
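When the Test step runs ceedling test:all, Ceedling generates a test runner for test_dummy.c, builds it, and runs it. The generated runner calls setUp() before and tearDown() after every function whose name starts with test. Below is a rough plain-C sketch of that sequence, written only to show the idea. It is not Ceedling's or Unity's actual generated code. CHECK_EQUAL_MESSAGE, run_tests() and the extra wraparound test case are hypothetical names added for illustration, and sum() is copied from dummy.c so the file compiles on its own.

```c
#include <stdint.h>
#include <stdio.h>

/* Copy of sum() from dummy.c so this sketch compiles on its own. */
static uint32_t sum(uint32_t a, uint32_t b)
{
    return a + b;
}

/* Failure counter: hypothetical stand-in for Unity's internal bookkeeping. */
static int failures = 0;

/* Hypothetical stand-in for TEST_ASSERT_EQUAL_MESSAGE: print and count mismatches. */
#define CHECK_EQUAL_MESSAGE(expected, actual, msg)                        \
    do {                                                                 \
        if ((expected) != (actual)) {                                    \
            printf("FAIL: %s (expected %lu, got %lu)\n", (msg),         \
                   (unsigned long)(expected), (unsigned long)(actual));  \
            failures++;                                                  \
        }                                                                \
    } while (0)

/* Static only because everything lives in one file here. */
static void setUp(void) { }
static void tearDown(void) { }

/* Same check as test__sum__two_integers in test_dummy.c. */
static void test__sum__two_integers(void)
{
    uint32_t res = sum(2, 3);
    CHECK_EQUAL_MESSAGE(5u, res, "2 + 3 = 5");
}

/* Hypothetical extra case: uint32_t addition wraps around modulo 2^32. */
static void test__sum__wraps_around(void)
{
    uint32_t res = sum(UINT32_MAX, 1u);
    CHECK_EQUAL_MESSAGE(0u, res, "UINT32_MAX + 1 wraps to 0");
}

/* Hypothetical runner: setUp() before and tearDown() after every test,
 * then a one-line summary. Returns the number of failed checks. */
int run_tests(void)
{
    void (*tests[])(void) = { test__sum__two_integers, test__sum__wraps_around };
    size_t count = sizeof tests / sizeof tests[0];

    failures = 0;
    for (size_t i = 0; i < count; i++) {
        setUp();
        tests[i]();
        tearDown();
    }
    printf("%zu tests, %d failures\n", count, failures);
    return failures;
}
```

To try it as a standalone program, add int main(void) { return run_tests(); } at the end and compile it with any C99 compiler. It prints 2 tests, 0 failures. In the pipeline, Ceedling handles all of this for you (runner generation, Unity's assertion macros and the final report) inside the feabhas/ceedling container.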

Well, there is much more you can do with pipelines. Consider this a brief introduction, and take your time to read the documentation to learn how to properly configure your pipelines on the platform you choose, be it GitLab, Jenkins, CircleCI, etc.

Link to Bitbucket pipelines official documentation: https://support.atlassian.com/bitbucket-cloud/docs/build-test-and-deploy-with-pipelines/