In the last part, we used up to three containers to set up our toolset, and we discovered that it can be a bit tedious and prone to errors. We need to do something about it. Well, Docker Compose is the solution when things get more complex. However, Docker Compose doesn't come bundled with the Docker Engine installation, so we need to install it separately using pacman with the following command:

$ sudo pacman -S docker-compose

Let’s make a simple test to calibrate ourselves before going all in with Compose. Let’s pull an Alpine image and run its container. Create a new file called compose.yml in the working directory and write the following. Indentation is very important in YAML files.

services:
  test: # service name (Compose derives the container name from it)
    image: alpine:latest # from image alpine
    command: ["echo", "Hello, World!"] # run command echo "Hello, World!"

To run the container, use the compose command and pay attention to the output because there is important information we need to note besides the echo output. Take a look at the container name and the network name.

$ docker compose up

[+] Running 2/2

✔ Container test-test-1 Created

Attaching to test-1

test-1 | Hello, World!

test-1 exited with code 0

We asked for a container named “test”; however, we can see the name is test-test-1. The reason is that Compose prefixes the name with a project name, which defaults to the name of the folder that holds the compose file (in my case, a folder called “test”), and appends an instance number. Can we change this? Yes. Also, a network called test_default is created for the occasion, and it is prefixed too.
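If what you want is full control over the name of a single container, Compose also has a container_name key. A minimal sketch, assuming a made-up name my-test (it is only an illustration and is not used anywhere else in this series):

```yaml
services:
  test: # service name
    image: alpine:latest
    container_name: my-test # hypothetical fixed name, replaces the generated test-test-1
    command: ["echo", "Hello, World!"]
```

The trade-off is that a fixed name has to be unique, so a service defined this way cannot be scaled to more than one container.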

Remove the container and also the network with docker compose down

$ docker compose down

[+] Running 2/2

✔ Container test-test-1 Removed

✔ Network test_default Removed

You can assign your own project name using the -p flag when you call Compose. However, you can also set it in the compose.yml file, just like in the code below. Additionally, add two more options to run the container in interactive mode.

name: tools # project name

services:
  test: # service name
    image: alpine:latest # from image alpine
    stdin_open: true # equal to flag -i
    tty: true # equal to flag -t

Run the container in detached mode using the compose up -d command. Pay attention because this time we are using docker compose and not docker. This is important. You can use docker ps to see if it is running in the background with the new prefix.

$ docker compose up -d

✔ Network tools_default Created

✔ Container tools-test-1 Started

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

6d247efcf359 alpine:latest "/bin/sh" 6 seconds ago Up 6 seconds tools-test-1

Connect to the running container using exec, and just write an echo command to test that we can use our simple Alpine container. Once you are tired of playing around, type exit as usual.

$ docker compose exec test /bin/sh

/ # echo "hola mundo"

hola mundo

And remove the container again:

$ docker compose down

[+] Running 2/2

✔ Container tools-test-1 Removed

✔ Network tools_default Removed

Running openocd image

Let’s see how we can run the image we already have from the previous posts with OpenOCD. If you remember, we need to define a network in order to use the container name to bind OpenOCD. However, in this case, we do not need to define anything since compose creates a default network automatically. The following YAML code is basically the equivalent of what we use in the command line with Docker.

name: tools # project name, used as prefix

services:
  open_server: # service name, used by the other containers to reach OpenOCD
    image: open
    ports: # port mapping
      - 3333:3333
    devices: # device mapping to usb ports
      - /dev/bus/usb:/dev/bus/usb

Run Compose to see how OpenOCD connects to our board and stays there waiting for GDB connections on port 3333:

$ docker compose up

[+] Running 2/2

✔ Network tools_default Created

✔ Container tools-open_server-1 Created

Attaching to open_server-1

open_server-1 | Open On-Chip Debugger 0.12.0

open_server-1 | Licensed under GNU GPL v2

open_server-1 | For bug reports, read

open_server-1 | http://openocd.org/doc/doxygen/bugs.html

open_server-1 | Info : The selected transport took over low-level target control. The results might differ compared to plain JTAG/SWD

open_server-1 | srst_only separate srst_nogate srst_open_drain connect_deassert_srst

open_server-1 |

open_server-1 | Info : Listening on port 6666 for tcl connections

open_server-1 | Info : Listening on port 4444 for telnet connections

open_server-1 | Info : clock speed 2000 kHz

open_server-1 | Info : STLINK V2J45M31 (API v2) VID:PID 0483:374B

open_server-1 | Info : Target voltage: 3.229863

open_server-1 | Info : [stm32g0x.cpu] Cortex-M0+ r0p1 processor detected

open_server-1 | Info : [stm32g0x.cpu] target has 4 breakpoints, 2 watchpoints

open_server-1 | Info : starting gdb server for stm32g0x.cpu on 3333

open_server-1 | Info : Listening on port 3333 for gdb connections

open_server-1 | [stm32g0x.cpu] halted due to breakpoint, current mode: Thread

open_server-1 | xPSR: 0x61000000 pc: 0x08000270 msp: 0x20023fe0

Adding the buildm image

The idea is to replicate what we did in the last example from the previous part. To do that, we are going to add the image we made earlier to build our project and also see how we can share our working directory with Compose. The volumes directive does the job: .:/app indicates that our current directory is shared with the container’s /app directory.
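For reference, the .:/app shorthand can be written directly in the compose file. A small sketch of the short volume syntax, which should behave like the longer bind form (type, source, target) for our purposes:

```yaml
services:
  buildm:
    image: buildm
    working_dir: /app
    volumes:
      - .:/app # short syntax: host path (relative to the compose file) : container path
```

Both forms mount the same directory; the long form is just more explicit about what kind of mount it is.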

name: tools

services:
  open_server: # service name, used by the other containers to reach OpenOCD
    image: open
    ports: # publish port 3333
      - 3333:3333
    devices: # device mapping to usb ports
      - /dev/bus/usb:/dev/bus/usb
  buildm: # service used to build the project with make
    image: buildm
    working_dir: /app
    volumes: # share current directory . with the container's /app directory
      - type: bind
        source: ./
        target: /app
    stdin_open: true # equal to flag -i
    tty: true # equal to flag -t

Run Compose with all containers in detached mode. To connect to the container, type docker compose exec buildm /bin/bash. Once there, try to build the project with make, just for fun.

$ docker compose up -d

✔ Network tools_default Created

✔ Container tools-buildm-1 Started

✔ Container tools-open_server-1 Started

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

0ba58a346c8f open "openocd -f board/st…" 17 seconds ago Up 16 seconds 0.0.0.0:3333->3333/tcp, :::3333->3333/tcp tools-open_server-1

b57992345156 buildm "/bin/bash" 17 seconds ago Up 16 seconds tools-buildm-1

$ docker compose exec buildm /bin/bash

root@b57992345156:/app# ls

buildm.dockerfile debug.dockerfile docker-compose.yml open.dockerfile project

root@b57992345156:/app# cd project

root@b57992345156:/app/project# make

...

Adding our debugger

Time to add the image with our debugger, gdbgui, to our compose.yml file. But first, a short aside. Previously, we said we couldn’t use the make target debug to automate the start of the debugging session because there wasn’t any way to ensure OpenOCD runs first. But now, thanks to Compose, we can tell the debug container to start only after the open_server container has been started. We only need to use the depends_on directive.

name: tools

services:
  open_server: # service name, used by the other containers to reach OpenOCD
    image: open
    ports: # port mapping
      - 3333:3333
    devices: # device mapping to usb ports
      - /dev/bus/usb:/dev/bus/usb
  buildm: # service used to build the project with make
    image: buildm
    working_dir: /app
    volumes: # volume mapping
      - type: bind
        source: ./
        target: /app
    stdin_open: true # equal to flag -i
    tty: true # equal to flag -t
  debug: # service running the gdbgui debugger
    image: debug
    working_dir: /app
    ports: # port mapping
      - 5000:5000
    volumes: # volume mapping
      - type: bind
        source: ./
        target: /app
    depends_on: # start only after open_server has been started
      - open_server
    stdin_open: true # equal to flag -i
    tty: true # equal to flag -t

Open the Makefile from your project and locate the following lines. Modify them to call gdbgui with the make debug target and instruct it to take the .gdbinit file and process the commands we will place there. I know it’s a long line…

#---launch a debug session, NOTE: it is mandatory to open a debug server session first-----------
debug :
# arm-none-eabi-gdb Build/$(TARGET).elf -iex "set auto-load safe-path /"
	/root/.local/bin/gdbgui --gdb-cmd='/usr/bin/arm-none-eabi-gdb -x=project/.gdbinit' -r

Note that gdb is not launched from the directory where the .gdbinit file is located. This is why we need to manually specify its location. In our case, we do this with -x=project/.gdbinit.

Open the .gdbinit file and modify it according to the code below so that it connects to the remote host. Also, define two functions we can use in gdb to flash and reflash our board every time we change our program without closing our debugger. When the gdbgui server runs, it will call gdb, which in turn will read the .gdbinit file and execute the commands in there. In this case, only the target remote command runs right away; the two functions are merely defined and do nothing until we call them.

#---connect to the openocd debug server
target remote open_server:3333

#---define a function to flash our board,
#---set a breakpoint in main and run to it
define flashing
  file project/Build/Temp.elf
  load
  mon reset halt
  break main
  continue
end

#---define a function to reflash our board
define reflashing
  load
  mon reset halt
end

Now, modify the debug Dockerfile (debug.dockerfile) so that our debug image runs gdb as soon as the container starts. Additionally, add a new package, make, to the list of tools for this container.

# Fetch a new image from archlinux
FROM archlinux:base

# install gdb for our stm32g0 microcontroller, python and pipx for gdbgui, and make
RUN pacman -Sy --noconfirm arm-none-eabi-gdb python python-pipx make
RUN pipx install gdbgui

# run gdb as soon as the container starts, using the debug make target
ENTRYPOINT [ "make", "-f", "project/makefile", "debug" ]

Maybe you’re thinking we need to run the command docker build to rebuild our debug image, but not this time. There’s a Compose directive for that. Just add build and, in our case, also indicate the Dockerfile.

  debug: # service running the gdbgui debugger
    image: debug # image name
    build:
      context: . # directory where the dockerfile is located
      dockerfile: debug.dockerfile # dockerfile used to build the image
    working_dir: /app
    ports: # port mapping
      - 5000:5000
    volumes: # volume mapping
      - type: bind
        source: ./
        target: /app
    depends_on: # start only after open_server has been started
      - open_server
    stdin_open: true # equal to flag -i
    tty: true # equal to flag -t

Time to run all three containers using a single command in detached mode. Please take your time and notice how each of them is started by Compose, along with the default network. Also, type the docker ps command and verify that all three of them are running.

$ docker compose up -d

✔ Network tools_default Created

✔ Container tools-buildm-1 Started

✔ Container tools-open_server-1 Started

✔ Container tools-debug-1 Started

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

670a1a5dac2c tools-debug "make -f project/mak…" 8 minutes ago Up 8 minutes 0.0.0.0:5000->5000/tcp, :::5000->5000/tcp tools-debug-1

106c4fb79449 buildm "/bin/bash" 8 minutes ago Up 8 minutes tools-buildm-1

eff0c63270aa open "openocd -f board/st…" 8 minutes ago Up 8 minutes 0.0.0.0:3333->3333/tcp, :::3333->3333/tcp tools-open_server-1

If you’ve been following the previous parts, you know the next step is to open your browser and type the IP address assigned to the debug container. But how do we know the IP address? There are two ways:

- Connect using compose exec and type ip addr show.
- Inspect the network where the three containers are connected. In this case, it is tools_default. Use the following command and locate the container data where our gdbgui is running.

$ docker network inspect tools_default

...

"a2113e07f6af5f72748e5d6608351debf6dec884035bc58169b536efffc6d428": {

"Name": "tools-debug-1",

"EndpointID": "a040e8b3c577886d2e3742d0497c29ba7427842ac0d87b869adf4d50606ed804",

"MacAddress": "02:42:ac:14:00:04",

"IPv4Address": "172.20.0.4/16",

"IPv6Address": ""

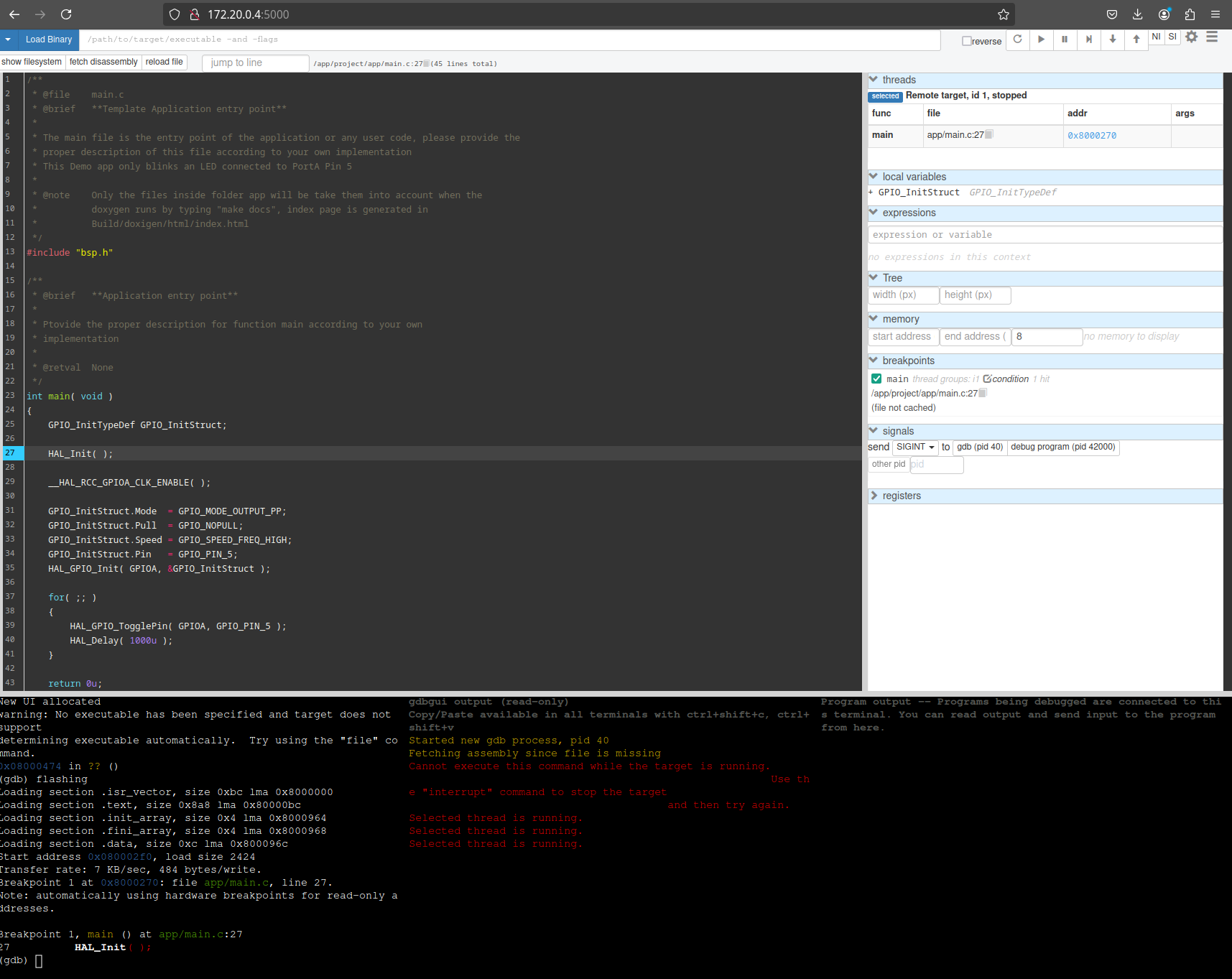

}Great! Open your browser and type that address. Then, use the command we previously defined in our .gdbinit file to flash our board. Why didn’t we flash the board right at the gdb start? Well, at this point, we don’t know if the application was previously built.

0.0.0.0:5000 also works. Want to know why? See “Is 0.0.0.0 a Valid IP Address?” on Baeldung on Computer Science.

Remember, when you modify your code and want to build again, you need to connect to the buildm container using compose exec. To flash the board again, just use the second function we defined in our .gdbinit file, reflashing, in your browser.

$ docker compose exec buildm /bin/sh

/ # make

...

Static IP addresses

There are going to be some cases where you will need to assign a static IP address to some or all of your containers for whatever reason you might have. For that kind of occasion, Docker Compose has the solution. It will require creating a new network (or maybe not). In the code below, you can see the networks directive used to create a new network and then assign it to each container.

name: tools

services:
  open_server: # service name, used by the other containers to reach OpenOCD
    image: open
    ports: # port mapping
      - 3333:3333
    devices: # device mapping to usb ports
      - /dev/bus/usb:/dev/bus/usb
    networks:
      static_net: # use network static_net
        ipv4_address: 172.25.0.3 # assign ip address to container
  buildm: # service used to build the project with make
    image: buildm
    working_dir: /app
    volumes: # volume mapping
      - type: bind
        source: ./
        target: /app
    stdin_open: true # equal to flag -i
    tty: true # equal to flag -t
    networks:
      static_net: # use network static_net
        ipv4_address: 172.25.0.4 # assign ip address to container
  debug: # service running the gdbgui debugger
    image: debug
    build:
      context: . # directory where the dockerfile is located
      dockerfile: debug.dockerfile # dockerfile used to build the image
    ports: # port mapping
      - 5000:5000
    working_dir: /app
    volumes: # volume mapping
      - type: bind
        source: ./
        target: /app
    depends_on: # start only after open_server has been started
      - open_server
    stdin_open: true # equal to flag -i
    tty: true # equal to flag -t
    networks:
      static_net: # use network static_net
        ipv4_address: 172.25.0.5 # assign ip address to container

networks:
  static_net: # network name to be used by containers
    driver: bridge # network driver
    ipam:
      config: # network configuration
        - subnet: 172.25.0.0/16 # network subnet
          gateway: 172.25.0.1 # network gateway

Build again and test your debugger with the IP address 172.25.0.5:5000. Also, do a network inspect to check the new static_net network information.
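About the “or maybe not” from before: the default network that Compose creates can be configured as well, so a separate network is not strictly required. A sketch of that variant, showing only the debug service and reusing the same subnet (this is an alternative I am assuming fits our setup, not what the rest of this post uses):

```yaml
name: tools

services:
  debug:
    image: debug
    ports:
      - 5000:5000
    networks:
      default: # the network Compose creates automatically (tools_default)
        ipv4_address: 172.25.0.5

networks:
  default: # customize the default network instead of adding a new one
    ipam:
      config:
        - subnet: 172.25.0.0/16
          gateway: 172.25.0.1
```

The other two services would get their addresses the same way, each with its own ipv4_address under networks and default.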

Docker Compose file names

Last but not least, the YAML file doesn’t necessarily need to be named compose.yml. You can use any name you want, but just like with Docker, you need to indicate the file using the -f flag and then the Compose command you want to run.

$ docker compose -f custom.yml up

So far, so good! Remember, at the end of the day, you should determine the best way to organize your containers and tools based on your needs. All the previous work was done in the name of teaching the different options and configurations Docker has, but there are more…
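One of those extra options deserves a quick sketch. In its short form, depends_on only waits for open_server to be started, not for OpenOCD to be ready to accept connections. Compose can also wait on a health check. The example below assumes that nc (netcat) is installed in the open image, which may not be the case, and uses OpenOCD's telnet port 4444 as the readiness signal so the gdb port is left alone:

```yaml
name: tools

services:
  open_server:
    image: open
    healthcheck:
      # assumption: nc (netcat) exists inside the open image
      test: ["CMD-SHELL", "nc -z localhost 4444"]
      interval: 2s # time between checks
      timeout: 2s # give up on a single check after this long
      retries: 10 # mark as unhealthy after this many failures
  debug:
    image: debug
    depends_on:
      open_server:
        condition: service_healthy # wait for the check above to pass
```

Only the relevant keys are shown here; the rest of each service stays as it was.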