So far, we've been using Arch Linux images as the base for our development images with the ARM GNU compiler and OpenOCD. The reason for this choice is that it's quite easy to install all the tools we need, thanks to the excellent community behind Arch Linux. However, there is a problem: the resulting images are quite large. Just take a look at our test image: it's 3.17GB!

[diego@DESKTOP-VD3GS75 test]$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

testimg latest 9c5452c97456 5 hours ago 3.17GB

archlinux base 0783ec448814 6 days ago 451MB

Instead of using Arch Linux, we're going to try Ubuntu, a well-known distribution that's familiar to many. Additionally, its Docker image is quite lightweight. If you're not familiar with Linux, you should understand that there are several ways to install programs. The most common method is using the distribution's package manager. For example, Arch uses pacman, Ubuntu uses apt, Fedora uses dnf, and so on.
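To make the package-manager point concrete, here is a minimal sketch of a shell function that reports which of these tools is present on the current system. The function name `detect_pkg_manager` and the list of candidates are illustrative assumptions, not part of the original setup.

```shell
#!/bin/sh
# Hypothetical helper: print the first known package manager found on PATH.
# The candidate list is an assumption; extend it for other distributions.
detect_pkg_manager() {
    for pm in pacman apt-get dnf yum apk; do
        if command -v "$pm" >/dev/null 2>&1; then
            echo "$pm"
            return 0
        fi
    done
    echo "unknown"
    return 1
}
```

Run inside an `ubuntu` container, `detect_pkg_manager` should print `apt-get`; inside `archlinux` it should print `pacman`.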

# Fetch the Ubuntu base image

FROM ubuntu

# Install the build tools for our stm32g0 microcontroller and OpenOCD to flash our device

RUN apt-get update && apt-get -y install make openocd wget xz-utils

There's a problem here: we can't install the arm-none-eabi toolchain using apt, because it's not available in the official repositories. But don't worry: on Linux, we can install packages manually. We can download the ARM GNU toolchain from its official website and place it in any folder for later use. To do this, we use wget to download the toolchain from ARM's website, then decompress the files and add the toolchain's bin directory to our PATH environment variable.

Additionally, we need to install some extra libraries to run the toolchain. These can also be downloaded using wget, and then we can install the .deb packages manually using dpkg.

# Fetch the Ubuntu base image

FROM ubuntu

# Install the build tools for our stm32g0 microcontroller and OpenOCD to flash our device

RUN apt-get update && apt-get -y install make openocd wget xz-utils

# libtinfo5 and libncurses5 are needed to run our arm toolchain, download from the archive repo and install

RUN wget http://archive.ubuntu.com/ubuntu/pool/universe/n/ncurses/libtinfo5_6.2-0ubuntu2.1_amd64.deb && \

wget http://archive.ubuntu.com/ubuntu/pool/universe/n/ncurses/libncurses5_6.2-0ubuntu2.1_amd64.deb && \

wget http://archive.ubuntu.com/ubuntu/pool/universe/n/ncurses/libncursesw5_6.2-0ubuntu2.1_amd64.deb && \

dpkg -i ./libtinfo5_6.2-0ubuntu2.1_amd64.deb && dpkg -i ./libncurses5_6.2-0ubuntu2.1_amd64.deb && dpkg -i ./libncursesw5_6.2-0ubuntu2.1_amd64.deb && \

rm libtinfo5_6.2-0ubuntu2.1_amd64.deb libncursesw5_6.2-0ubuntu2.1_amd64.deb libncurses5_6.2-0ubuntu2.1_amd64.deb

# Download the ARM GNU toolchain using wget, decompress it, and add its bin directory to the PATH variable

RUN wget https://developer.arm.com/-/media/Files/downloads/gnu/13.3.rel1/binrel/arm-gnu-toolchain-13.3.rel1-x86_64-arm-none-eabi.tar.xz && \

tar -xvf arm-gnu-toolchain-13.3.rel1-x86_64-arm-none-eabi.tar.xz -C /home/ubuntu && \

rm arm-gnu-toolchain-13.3.rel1-x86_64-arm-none-eabi.tar.xz && \

echo 'export PATH=/home/ubuntu/arm-gnu-toolchain-13.3.rel1-x86_64-arm-none-eabi/bin:$PATH' >> ~/.bashrc

With that in place, we have a new image to build:

$ docker build -t testmin .

Take a look and compare the size of the new image with the previous one built with Arch: it's almost three times smaller!

[diego@DESKTOP-VD3GS75 test]$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

testmin latest 9993aa2ce494 11 minutes ago 1.16GB

testimg latest 9c5452c97456 26 hours ago 3.17GB

archlinux base 0783ec448814 7 days ago 451MB

ubuntu latest 59ab366372d5 3 weeks ago 78.1MB

Of course, you can run both images to compile and debug, just like we did with the previous images based on Arch. I'll leave that to you. What I wanted to show is that sometimes it won't be possible to rely on the distro's package manager to install all the packages needed for your development environment. The way to install those extra packages can vary significantly; it depends less on Docker than on the underlying Linux system. So, some prior knowledge of Linux package management is necessary.

Here's another case: you have a package on your local machine and need to copy it into your Docker image for installation. COPY can only read files from the build context (the directory passed to docker build) and does not expand ~, so first copy the .deb files (libtinfo5_6.2-0ubuntu2.1_amd64.deb, libncursesw5_6.2-0ubuntu2.1_amd64.deb, and libncurses5_6.2-0ubuntu2.1_amd64.deb) from your local ~/Downloads directory into the directory that holds the Dockerfile. From there, we copy them into the image's /home directory and install them using dpkg.

# Copy the .deb packages from the build context into the Docker image

COPY *_amd64.deb /home/

# libtinfo5 and libncurses5 are needed to run our arm toolchain; install them from the copied files, then remove the files

RUN dpkg -i /home/libtinfo5_6.2-0ubuntu2.1_amd64.deb && dpkg -i /home/libncurses5_6.2-0ubuntu2.1_amd64.deb && dpkg -i /home/libncursesw5_6.2-0ubuntu2.1_amd64.deb && \

rm /home/libtinfo5_6.2-0ubuntu2.1_amd64.deb /home/libncursesw5_6.2-0ubuntu2.1_amd64.deb /home/libncurses5_6.2-0ubuntu2.1_amd64.deb

Building the image needed to start developing can be challenging in some cases, especially in embedded systems where many tools are not readily available or require special setups. However, once it's done, it becomes quite easy to share the image/container with other developers.
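Before sharing an image, it can help to confirm that the tools it is supposed to provide are actually reachable. The following is a minimal sketch of such a check, meant to be run in a shell inside the container. The function name `check_tools` is a hypothetical helper, not part of the original setup.

```shell
#!/bin/sh
# Hypothetical helper: report which of the given commands are on PATH.
# Returns 0 when every tool is found, 1 if at least one is missing.
check_tools() {
    missing=0
    for tool in "$@"; do
        if command -v "$tool" >/dev/null 2>&1; then
            echo "found:   $tool"
        else
            echo "missing: $tool"
            missing=1
        fi
    done
    return "$missing"
}
```

For the Ubuntu image, a call such as `check_tools make openocd arm-none-eabi-gcc` would flag a toolchain that never made it onto the PATH. Because the Dockerfile extends PATH through ~/.bashrc, the toolchain only shows up when the check runs from an interactive bash session.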

Using Multiple Images

So far, we've been using a single image to create containers for different purposes. However, this is not the most common approach with Docker. Continuing like this could result in a very complex Dockerfile and large, heavy images (though this will depend on the tools we use, of course). Instead, let's create three separate images for three different containers: one for OpenOCD, one for building our software, and one for debugging our application.

OpenOCD Image

For the OpenOCD image, we will use the lightweight Alpine Linux distribution. This image will be dedicated to running OpenOCD only, and the debug server should start as soon as the container is launched. It should remain active, waiting for connections. This image does not need to share the application directory.

Create a new file called openocd.dockerfile. Our container will be named open_server, and this is important for later steps.

# Fetch the Alpine base image

FROM alpine

# Install OpenOCD, the only tool this image needs

RUN apk add --no-cache openocd

# Run OpenOCD as soon as the container starts and bind it to the container's own IP address, using its name

ENTRYPOINT [ "openocd", "-f", "board/st_nucleo_g0.cfg", "-c", "bindto open_server" ]

Build Image

The second image will use Ubuntu and include the necessary tools for building our software every time we modify our code. This image will share our working directory from the host machine with a directory called app inside the container. Let’s name this Dockerfile build.dockerfile.

# Fetch the Ubuntu base image

FROM ubuntu

# Install the build tools for our stm32g0 microcontroller

RUN apt-get update && apt-get -y install make wget xz-utils

# libtinfo5 and libncurses5 are needed to run our arm toolchain, download from the archive repo and install

RUN wget http://archive.ubuntu.com/ubuntu/pool/universe/n/ncurses/libtinfo5_6.2-0ubuntu2.1_amd64.deb && \

wget http://archive.ubuntu.com/ubuntu/pool/universe/n/ncurses/libncurses5_6.2-0ubuntu2.1_amd64.deb && \

wget http://archive.ubuntu.com/ubuntu/pool/universe/n/ncurses/libncursesw5_6.2-0ubuntu2.1_amd64.deb && \

dpkg -i ./libtinfo5_6.2-0ubuntu2.1_amd64.deb && dpkg -i ./libncurses5_6.2-0ubuntu2.1_amd64.deb && dpkg -i ./libncursesw5_6.2-0ubuntu2.1_amd64.deb && \

rm libtinfo5_6.2-0ubuntu2.1_amd64.deb libncursesw5_6.2-0ubuntu2.1_amd64.deb libncurses5_6.2-0ubuntu2.1_amd64.deb

# Download the ARM GNU toolchain using wget, decompress it, and add its bin directory to the PATH variable

RUN wget https://developer.arm.com/-/media/Files/downloads/gnu/13.3.rel1/binrel/arm-gnu-toolchain-13.3.rel1-x86_64-arm-none-eabi.tar.xz && \

tar -xvf arm-gnu-toolchain-13.3.rel1-x86_64-arm-none-eabi.tar.xz -C /home/ubuntu && \

rm arm-gnu-toolchain-13.3.rel1-x86_64-arm-none-eabi.tar.xz && \

echo 'export PATH=/home/ubuntu/arm-gnu-toolchain-13.3.rel1-x86_64-arm-none-eabi/bin:$PATH' >> ~/.bashrc

Debugger Image

For the third image, let's go with Arch Linux—because why not? The only tool we need to install is GDB for our ARM microcontrollers. Just like we did with the OpenOCD image, we will configure the container to start the debugger as soon as it's launched. Name this Dockerfile debug.dockerfile.

# Fetch a new image from archlinux

FROM archlinux:base

# Install GDB for our ARM microcontrollers

RUN pacman -Sy --noconfirm arm-none-eabi-gdb

# Run the GDB debugger as soon as the container starts

ENTRYPOINT [ "arm-none-eabi-gdb" ]

Time to build our three images. We put all three Dockerfiles in the same directory, so to build each one we need to specify its Dockerfile using the flag -f <filename.dockerfile>, just like this:

$ docker build -f openocd.dockerfile -t openocd .

$ docker build -f build.dockerfile -t buildm .

$ docker build -f debug.dockerfile -t debug .

This generates three new images, which we can list with the images command. Look how lightweight the OpenOCD one is:

$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

debug latest 2d822743bdd2 4 seconds ago 641MB

buildm latest 731d92bb65a3 41 seconds ago 1.15GB

openocd latest db4268670415 About a minute ago 12.8MB

archlinux base ad78b172bfc7 2 days ago 452MB

ubuntu latest 59ab366372d5 3 weeks ago 78.1MB

alpine latest 91ef0af61f39 8 weeks ago 7.79MB

Time to run each of them, starting with OpenOCD, our debug server. Notice that we use the flag -d on this one: the container runs in the background, so we won't see it, but it's there, connected to our board. Trust me.
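The run commands in this section attach containers to a user-defined network called `mynet`, on which Docker resolves container names such as `open_server`. Assuming that network might not exist yet on your machine, here is a minimal sketch that creates it only when it is missing. The function name `ensure_network` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: create a user-defined network only if it does not exist yet.
# $1 = network name (defaults to "mynet", the name used by the run commands here).
ensure_network() {
    net="${1:-mynet}"
    if docker network inspect "$net" >/dev/null 2>&1; then
        echo "network $net already exists"
    else
        docker network create "$net" >/dev/null && echo "network $net created"
    fi
}
```

Calling `ensure_network` once before starting the first container is enough; later calls report that the network already exists.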

$ docker run -d --rm --device=/dev/bus/usb:/dev/bus/usb -p 3333:3333 --network mynet --name open_server openocd

For the second one, notice that we are not connecting the container to the mynet network, because it isn't necessary (though putting all of them on the same network may be good practice; I'm not sure). Build your project once the container is running:

$ docker run -it --rm -w /app --mount type=bind,src="$(pwd)",dst=/app buildm

# cd project

# make

I'm assuming you cloned the template project as usual and your working directory looks like this. I'm also assuming you will run all the containers from this directory.

$ tree -L 1

.

├── build.dockerfile

├── debug.dockerfile

├── openocd.dockerfile

└── project

2 directories, 3 files

Finally, the third container, which will run our debugger, needs to be connected to the same network as the first container (OpenOCD). This is because the debugger must connect to OpenOCD using port 3333. Additionally, we need to run it in interactive mode.

$ docker run -it --rm -w /app --mount type=bind,src="$(pwd)",dst=/app --network mynet debug

At this point, we have three containers running, each from a different image. We use the second container to build our project with make, while the first container is running OpenOCD, with a connection open on port 3333, waiting for GDB to connect.

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

f95b4cc44b85 debug "arm-none-eabi-gdb" 16 seconds ago Up 16 seconds zealous_newton

511f1c8b08c6 buildm "/bin/bash" About a minute ago Up About a minute relaxed_raman

929aed677d64 openocd "openocd -f board/st…" About a minute ago Up About a minute open_server

In the debug container (the third one), let's load the program we built with the second one. Enter the instruction target extended-remote open_server:3333 to connect to OpenOCD:

(gdb) target extended-remote open_server:3333

Remote debugging using open_server:3333

warning: No executable has been specified and target does not support

determining executable automatically. Try using the "file" command.

0x080002f0 in ?? ()

Yeah! We're connected to our board. Now, simply indicate the ELF binary to load with the file command, flash the memory using load, and then apply a reset with mon reset halt. After that, the sky's the limit: start debugging!

(gdb) file project/Build/temp.elf

A program is being debugged already.

Are you sure you want to change the file? (y or n) y

Reading symbols from project/Build/temp.elf...

(gdb) load

Loading section .isr_vector, size 0xbc lma 0x8000000

Loading section .text, size 0x8a8 lma 0x80000bc

Loading section .init_array, size 0x4 lma 0x8000964

Loading section .fini_array, size 0x4 lma 0x8000968

Loading section .data, size 0xc lma 0x800096c

Start address 0x080002f0, load size 2424

Transfer rate: 7 KB/sec, 484 bytes/write.

(gdb) mon reset halt

Unable to match requested speed 2000 kHz, using 1800 kHz

Unable to match requested speed 2000 kHz, using 1800 kHz

[stm32g0x.cpu] halted due to debug-request, current mode: Thread

xPSR: 0xf1000000 pc: 0x080002f0 msp: 0x20024000

(gdb)

If you change your code and rebuild it, you can reflash the board by using the load command and then applying another reset with mon reset halt.

You might be wondering why we didn't use the make debug target from the Makefile like in the previous post. Well, if we did that, GDB would automatically run the commands in the .gdbinit file, assuming that OpenOCD is already running. But I didn't want to assume that for this example. There are many ways to configure the debugger's startup using GDB scripting, but I'll leave that to you as an exercise. This is primarily meant to teach you about containers, not GDB.
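As one possible starting point for that exercise, here is a minimal sketch that writes the commands typed by hand earlier into a GDB command file. The file name `connect.gdb` and the function name `write_gdb_script` are assumptions made for illustration; only the GDB commands themselves come from the session above.

```shell
#!/bin/sh
# Hypothetical helper: write the manual GDB session from this post into a command file.
# $1 = output file (default: connect.gdb)
# $2 = ELF file to load (default: project/Build/temp.elf)
write_gdb_script() {
    script="${1:-connect.gdb}"
    elf="${2:-project/Build/temp.elf}"
    cat > "$script" <<EOF
# Select the ELF first, then connect to the OpenOCD container by name, flash, and halt
file $elf
target extended-remote open_server:3333
load
monitor reset halt
EOF
}
```

Since arguments placed after the image name are appended to the entrypoint, appending `-x connect.gdb` to the `docker run ... debug` command should make GDB execute the file at startup, provided OpenOCD is already running.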

Updating the Debugger

Why do we need up to three containers? Well, in reality, you may not need all of them—or maybe you will. It all depends on how your team decides to structure the workflow. This approach offers a lot of flexibility, but at the cost of maintaining multiple images and containers. Sure, maybe this example doesn’t require all this complexity, but the goal is to teach you as much as possible about Docker, and allow you to decide how to implement your own workflow.

Now, let's modify your Dockerfile to include Python, pipx, and gdbgui, a web-based visual frontend for the GDB debugger.

# Fetch a new image from archlinux

FROM archlinux:base

# Install Python, pipx, and GDB for our ARM microcontrollers

RUN pacman -Sy --noconfirm python python-pipx arm-none-eabi-gdb

#install gdbgui

RUN pipx install gdbgui

# Run gdbgui as soon as the container starts

ENTRYPOINT [ "/root/.local/bin/gdbgui", "--gdb-cmd=/usr/bin/arm-none-eabi-gdb", "-r" ]

Build again with the same name and run the container as usual, this time publishing port 5000 with -p 5000:5000. You will see that a web server is now running in the container; take note of the IP address and port it reports.

$ docker build -f debug.dockerfile -t debug .

...

$ docker run -it --rm -w /app --mount type=bind,src="$(pwd)",dst=/app --network mynet -p 5000:5000 debug

Warning: authentication is recommended when serving on a publicly accessible IP address. See gdbgui --help.

View gdbgui at http://172.18.0.3:5000

View gdbgui dashboard at http://172.18.0.3:5000/dashboard

exit gdbgui by pressing CTRL+C

* Serving Flask app 'gdbgui.server.app'

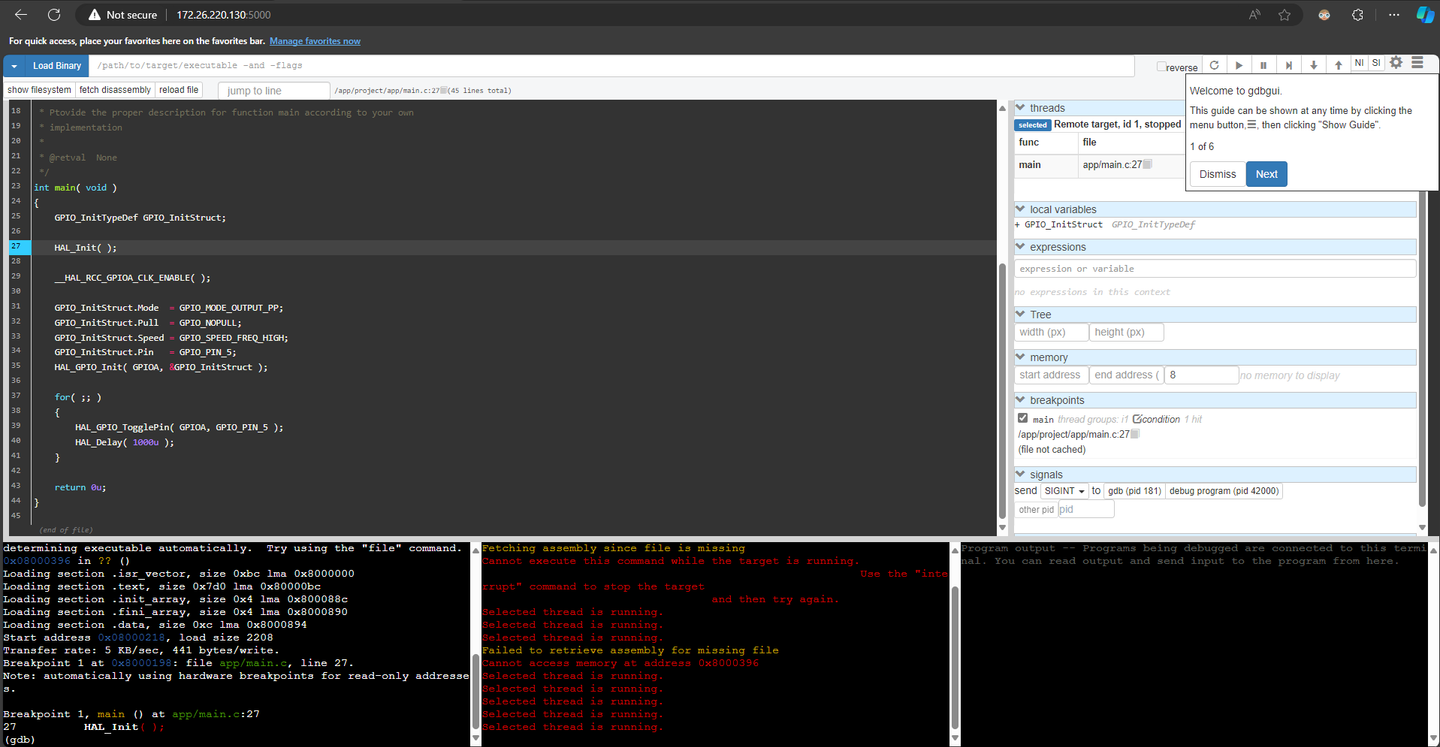

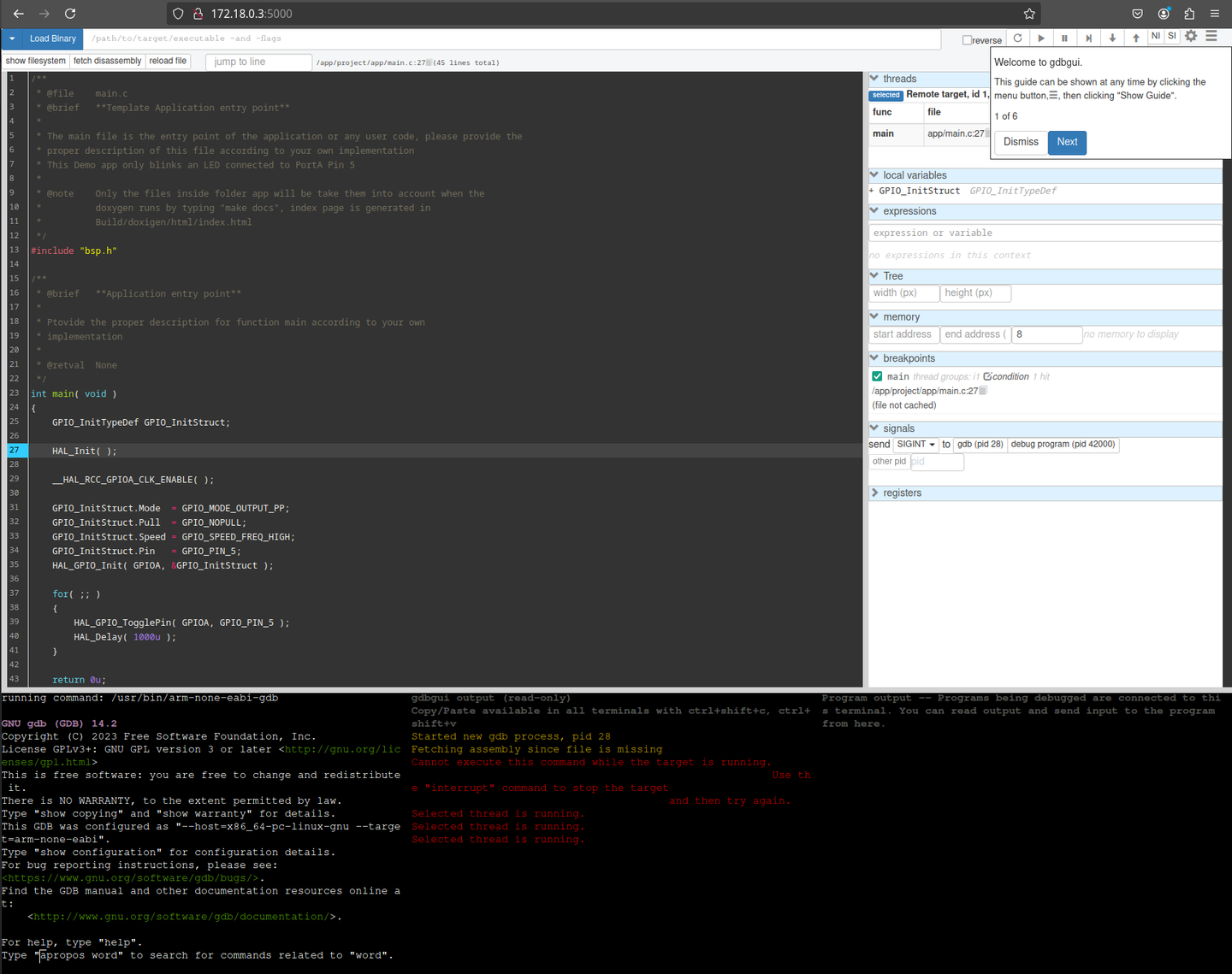

* Debug mode: offOpen you browser and type the IP address and the port, this will display a page with gdbgui running, in my case is http://172.17.0.3:5000

To run our program, we need to connect to OpenOCD and enter basically the same instructions as before, but this time in the gdbgui terminal (bottom-left corner of the screen). Here is the list of instructions:

(gdb) target extended-remote open_server:3333

...

(gdb) file project/Build/temp.elf

...

(gdb) load

...

(gdb) mon reset halt

...

(gdb) break main

...

(gdb) continue

Continuing.

Breakpoint 1, main () at app/main.c:27

27 HAL_Init( );

For Windows and WSL users, you'll need to use the IP address assigned to your WSL instance. To find the address, simply run the command ip addr show.

[diego@DESKTOP-VD3GS75 ~]$ ip addr show

...

...

2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc mq state UP group default qlen 1000

link/ether 00:15:5d:04:a4:15 brd ff:ff:ff:ff:ff:ff

inet 172.26.220.130/20 brd 172.26.223.255 scope global eth0

...

...

In your Windows browser, use that address to display gdbgui. In my case it is http://172.26.220.130:5000
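If you would rather not read the address off the full listing, here is a minimal sketch that extracts it. It assumes the WSL interface is called eth0, as in the output above, and that gdbgui listens on port 5000. The function names `first_ipv4` and `gdbgui_url` are hypothetical helpers.

```shell
#!/bin/sh
# Hypothetical helper: print the first IPv4 address found in `ip addr show`
# output read from stdin, without the /prefix-length suffix.
first_ipv4() {
    awk '$1 == "inet" { split($2, parts, "/"); print parts[1]; exit }'
}

# Hypothetical helper: build the gdbgui URL for the eth0 interface.
# Assumes the interface name eth0 and port 5000, as used in this post.
gdbgui_url() {
    addr=$(ip -4 addr show eth0 | first_ipv4)
    echo "http://$addr:5000"
}
```

With an eth0 configured like the one shown above, `gdbgui_url` would print the same address used in this post.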