In a multitasking system, there is potential for conflict if one task starts to access a resource but does not complete its access before being transitioned out of the Running state. If the task leaves the resource in an inconsistent state, then access to the same resource by any other task or interrupt could result in data corruption. For instance:

- Task A executes and starts to write "Hello world" to the LCD

- Task A is pre-empted by Task B after outputting only the beginning of the string, "Hello w"

- Task B writes "Abort, Retry?" to the LCD before entering the Blocked state

- Task A continues from the point at which it was pre-empted and completes outputting "orld"

- The result is "Hello wAbort, Retry?orld"
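The interleaving above can be replayed deterministically on a host PC. The sketch below is a simulation, not FreeRTOS code. The LCD is modelled as a character buffer. The hypothetical helpers `lcd_write_chars()` and `simulate_preemption()` stand in for the display driver and for the point at which Task B pre-empts Task A.

```c
#include <string.h>

/* Hypothetical stand-in for the LCD: characters are appended to a buffer. */
static char lcd[64];
static size_t lcd_len;

/* Write 'count' characters of 'text' to the simulated LCD, one at a time,
   the way a driver sends them to a display controller. */
static void lcd_write_chars(const char *text, size_t count)
{
    for (size_t i = 0; i < count && lcd_len < sizeof lcd - 1; i++) {
        lcd[lcd_len++] = text[i];
    }
    lcd[lcd_len] = '\0';
}

/* Replays the scenario: Task A is pre-empted after 'split' characters,
   Task B writes its whole message, then Task A resumes where it stopped. */
static const char *simulate_preemption(size_t split)
{
    const char *task_a_msg = "Hello world";
    const char *task_b_msg = "Abort, Retry?";

    lcd_len = 0;
    lcd[0] = '\0';
    lcd_write_chars(task_a_msg, split);                               /* Task A starts   */
    lcd_write_chars(task_b_msg, strlen(task_b_msg));                  /* Task B pre-empts */
    lcd_write_chars(task_a_msg + split, strlen(task_a_msg) - split);  /* Task A resumes  */
    return lcd;
}
```

With `split` set to 7, the buffer reads "Hello wAbort, Retry?orld", matching the example. With `split` set to 11, Task A finishes before it is pre-empted and the buffer reads "Hello worldAbort, Retry?".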

Non-Atomic Access to Variables

Updating multiple members of a structure, or updating a variable that is larger than the natural word size of the architecture (for example, a 32-bit variable on a 16-bit machine), are examples of non-atomic operations. If such an operation is interrupted part-way through, data can be lost or corrupted.
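The word-size case can be illustrated with a deterministic simulation of a 32-bit machine that updates a 64-bit counter using two separate 32-bit stores. This is a sketch rather than real concurrency. The hypothetical `isr_snapshot` argument of `increment_counter()` records what an interrupt would read if it fired between the two stores.

```c
#include <stdint.h>
#include <stddef.h>

/* A 64-bit counter held as two 32-bit words, as a 32-bit CPU would store it. */
static volatile uint32_t counter_lo;
static volatile uint32_t counter_hi;

static uint64_t read_counter(void)
{
    return ((uint64_t)counter_hi << 32) | counter_lo;
}

static void set_counter(uint64_t value)
{
    counter_lo = (uint32_t)value;
    counter_hi = (uint32_t)(value >> 32);
}

/* Increment the counter with two separate word stores. When 'isr_snapshot'
   is non-NULL, it receives the value an interrupt would read after the low
   word has been written but before the high word has. */
static void increment_counter(uint64_t *isr_snapshot)
{
    uint64_t next = read_counter() + 1;

    counter_lo = (uint32_t)next;             /* first store: low word   */
    if (isr_snapshot != NULL) {
        *isr_snapshot = read_counter();      /* simulated ISR reads here */
    }
    counter_hi = (uint32_t)(next >> 32);     /* second store: high word */
}
```

Starting from 0xFFFFFFFF, the simulated interrupt reads 0. That is neither the old value nor the new value, 0x100000000. The final value is correct only after both stores have completed.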

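Read-modify-write sequences are also non-atomic. A single C statement can compile to several instructions. If an interrupt or another task modifies the variable between the read and the write, that change is overwritten and lost:

```c
/* The C code being compiled. */
GlobalVar |= 0x01;
```

```asm
; The assembly code produced (ARM).
LDR r4, [pc, #284]    ; load a base address from the literal pool
LDR r0, [r4, #0x08]   ; read GlobalVar (offset 0x08 from that base) into r0
ORR r0, r0, #0x01     ; set bit 0 in the register copy
STR r0, [r4, #0x08]   ; write the modified value back to GlobalVar
```

Function Reentrancy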

A function is reentrant if it is safe to call the function from more than one task, or from both tasks and interrupts. The following code shows two versions of the same function.

```c
long lVar2;

long addOneHundred( long lVar1 )
{
    /* This function is not reentrant because it uses a global variable,
       stored at a fixed location in memory that is shared by every caller. */
    lVar2 = lVar1 + 100;
    return lVar2;
}
```

```c
long addOneHundred( long lVar1 )
{
    /* This function is reentrant because it uses only a parameter and a local
       variable, created on the caller's stack each time the function runs. */
    long lVar2;

    lVar2 = lVar1 + 100;
    return lVar2;
}
```

Mutual Exclusion

Access to a resource that is shared between tasks, or between tasks and interrupts, must be managed using a mutual exclusion technique to ensure data consistency. FreeRTOS provides several features that can be used to implement mutual exclusion. Where practical, however, the best approach is to design the application so that resources are not shared at all.

Critical Sections

Critical sections are regions of code that are surrounded by calls to special macros:

taskENTER_CRITICAL / taskEXIT_CRITICAL

This kind of critical section works by disabling interrupts. Depending on the FreeRTOS port, interrupts are disabled either globally or up to the interrupt priority set by configMAX_SYSCALL_INTERRUPT_PRIORITY in FreeRTOSConfig.h.

- Because those interrupts are disabled, no other task and no masked ISR can execute until the critical section is exited using taskEXIT_CRITICAL().

- Advantage: Provides mutual exclusion against other tasks and against the masked interrupts, so the task executing the critical section has exclusive access.

- Disadvantage: Increases interrupt latency, because masked interrupts cannot be serviced until the critical section is exited.

Critical sections must be kept very short; otherwise, they will adversely affect interrupt response times.
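The effect of a critical section on the read-modify-write `GlobalVar |= 0x01` can be modelled on a host PC. This sketch is a simulation, not the FreeRTOS implementation. The hypothetical functions `enter_critical()` and `exit_critical()` set an interrupt-mask flag. An interrupt raised while the flag is set is held pending and serviced on exit, much as a masked hardware interrupt is serviced once it is unmasked.

```c
#include <stdbool.h>
#include <stdint.h>

static volatile uint32_t GlobalVar;
static bool interrupts_masked;   /* models the effect of taskENTER_CRITICAL() */
static bool interrupt_pending;   /* an interrupt that arrived while masked    */

/* Hypothetical ISR that also modifies the shared variable. */
static void isr(void) { GlobalVar |= 0x02; }

static void raise_interrupt(void)
{
    if (interrupts_masked) {
        interrupt_pending = true;   /* held until interrupts are re-enabled */
    } else {
        isr();                      /* serviced immediately */
    }
}

static void enter_critical(void) { interrupts_masked = true; }

static void exit_critical(void)
{
    interrupts_masked = false;
    if (interrupt_pending) {        /* service the interrupt that was held off */
        interrupt_pending = false;
        isr();
    }
}

/* Performs GlobalVar |= 0x01 as three separate steps, with an interrupt
   arriving between the read and the write. Returns the final value. */
static uint32_t task_set_bit0(bool use_critical_section)
{
    GlobalVar = 0;
    if (use_critical_section) enter_critical();
    uint32_t r0 = GlobalVar;   /* read (LDR)          */
    raise_interrupt();         /* interrupt fires     */
    r0 |= 0x01;                /* modify (ORR)        */
    GlobalVar = r0;            /* write back (STR)    */
    if (use_critical_section) exit_critical();
    return GlobalVar;
}
```

Without the critical section, the result is 0x01, because the bit set by the ISR is overwritten. With it, the ISR runs after the write and the result is 0x03.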

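```c
#include "FreeRTOS.h"
#include "task.h"

/* Shared with other tasks and with interrupts (defined elsewhere). */
extern volatile uint32_t GlobalVar;

void vCriticalFunction( void )
{
    /* Interrupts (up to configMAX_SYSCALL_INTERRUPT_PRIORITY on ports that
       support it) are disabled, so the read-modify-write cannot be split. */
    taskENTER_CRITICAL();
    GlobalVar |= 0x01;
    taskEXIT_CRITICAL();
}
```

Suspending the Scheduler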

Suspending the scheduler prevents a context switch from occurring but leaves interrupts enabled.

vTaskSuspendAll / xTaskResumeAll

- These functions suspend task context switching (i.e., the scheduler is paused). However, interrupts remain enabled and can still execute.

- Once the scheduler is resumed with xTaskResumeAll(), any context switch that was requested while it was suspended is performed.

- Advantage: Lower impact on interrupt latency, since interrupts are still processed.

- Disadvantage: Does not protect against interrupts accessing the same resources, so it does not provide complete mutual exclusion.
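The difference between suspending the scheduler and disabling interrupts can be seen in a small simulation. This is a sketch, not the FreeRTOS implementation. The hypothetical `suspend_all()` and `resume_all()` only record that a context switch to a higher priority task was requested. The simulated interrupt always runs immediately.

```c
#include <stdbool.h>
#include <string.h>

static char out[64];             /* shared output, e.g. a console */
static size_t out_len;
static bool scheduler_suspended;
static bool yield_pending;       /* context switch requested while suspended */

static void emit(const char *s)
{
    size_t n = strlen(s);
    if (out_len + n < sizeof out) {
        memcpy(out + out_len, s, n + 1);   /* copies the terminator too */
        out_len += n;
    }
}

static void higher_priority_task(void) { emit("[B]"); }
static void isr(void) { emit("!"); }

/* A switch to the higher priority task is deferred while suspended. */
static void request_context_switch(void)
{
    if (scheduler_suspended) {
        yield_pending = true;
    } else {
        higher_priority_task();
    }
}

static void suspend_all(void) { scheduler_suspended = true; }

static void resume_all(void)
{
    scheduler_suspended = false;
    if (yield_pending) {
        yield_pending = false;
        higher_priority_task();   /* the held-pending switch happens now */
    }
}

/* A low priority task prints "Hello" one character at a time. After the
   second character, an interrupt runs and readies the higher priority task. */
static const char *run(bool suspend)
{
    const char *msg = "Hello";
    char ch[2] = { 0, 0 };

    out_len = 0;
    out[0] = '\0';
    if (suspend) suspend_all();
    for (size_t i = 0; msg[i] != '\0'; i++) {
        ch[0] = msg[i];
        emit(ch);
        if (i == 1) {
            isr();                    /* interrupts are still enabled */
            request_context_switch(); /* the ISR readies task B       */
        }
    }
    if (suspend) resume_all();
    return out;
}
```

`run(false)` produces "He![B]llo": task B's output lands in the middle of the low priority task's string. `run(true)` produces "He!llo[B]": the task switch waits for `resume_all()`. The interrupt's "!" still appears mid-string, which is why suspending the scheduler does not protect against ISRs.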

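```c
#include <stdio.h>
#include "FreeRTOS.h"
#include "task.h"

void vPrintString( const char *pcString )
{
    /* No other task can run while the scheduler is suspended, so this output
       cannot be interleaved with output from another task. */
    vTaskSuspendAll();
    printf( "%s", pcString );
    fflush( stdout );
    xTaskResumeAll();
}
```

When to use each?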

- Use taskENTER_CRITICAL() if you need to protect resources or data from both tasks and interrupts.

- Use vTaskSuspendAll() if you only need to prevent conflicts between tasks and access from interrupts is not a concern.

Mutexes

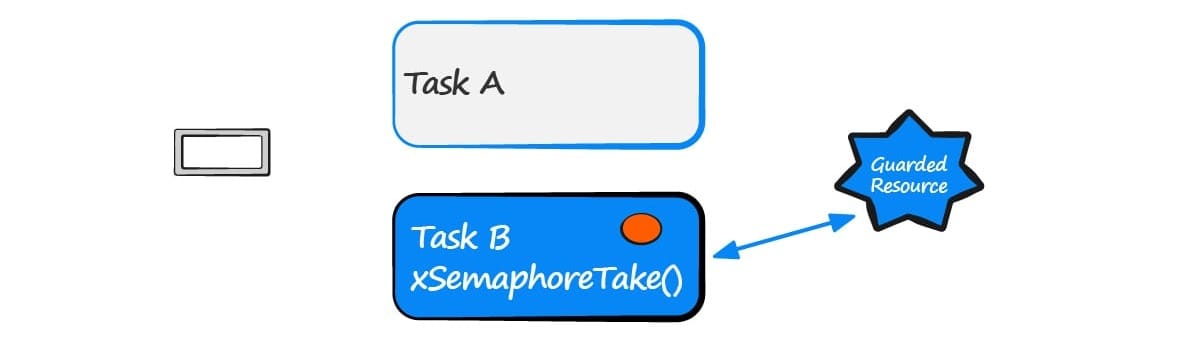

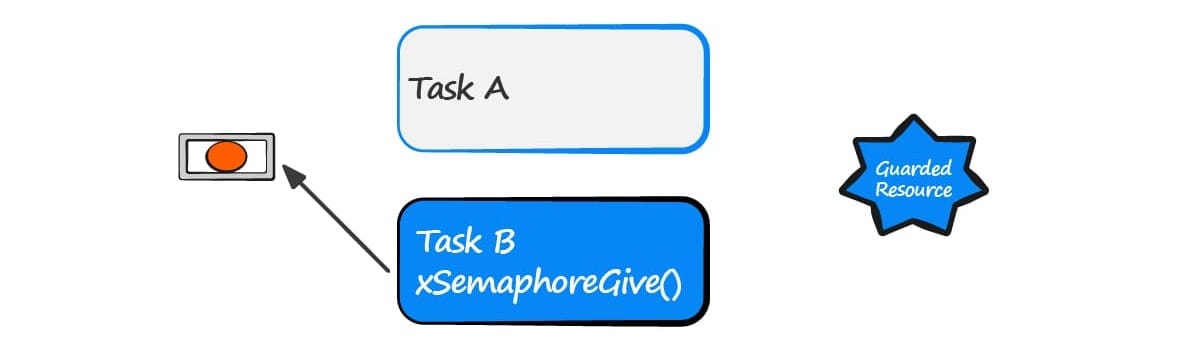

A mutex is a special type of binary semaphore that is used to control access to a resource that is shared between two or more tasks. When used in a mutual exclusion scenario, the mutex can be thought of as a token that is associated with the resource being shared.

Two tasks each want to access the resource, but a task is not permitted to access the resource unless it is the mutex holder.

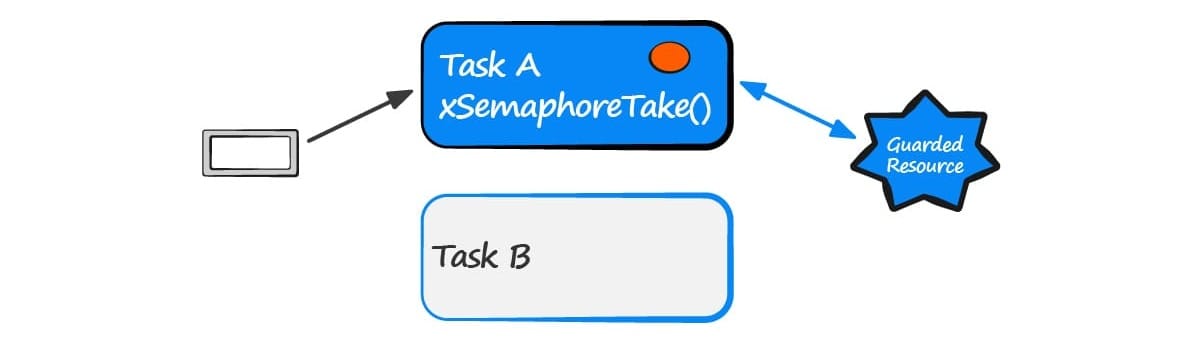

Task A attempts to take the mutex. Because the mutex is available, Task A successfully becomes the mutex holder, so it is permitted to access the resource.

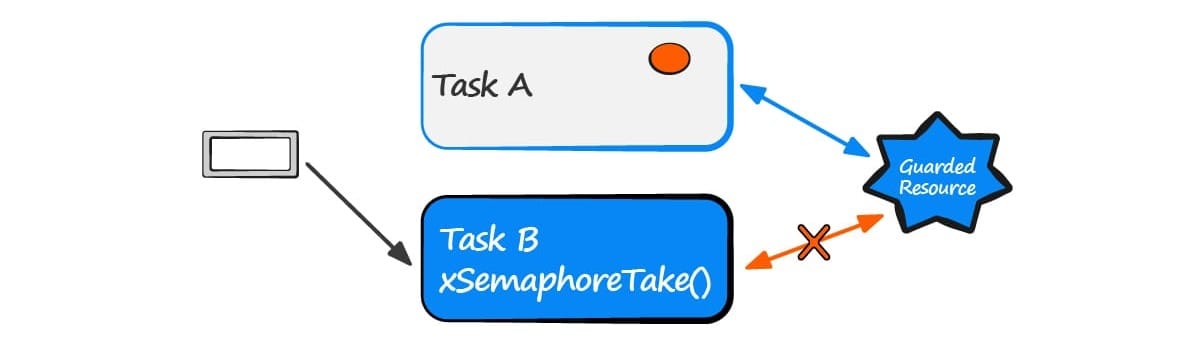

Task B executes and attempts to take the same mutex. Task A still holds the mutex, so the attempt fails and Task B is not permitted to access the resource.

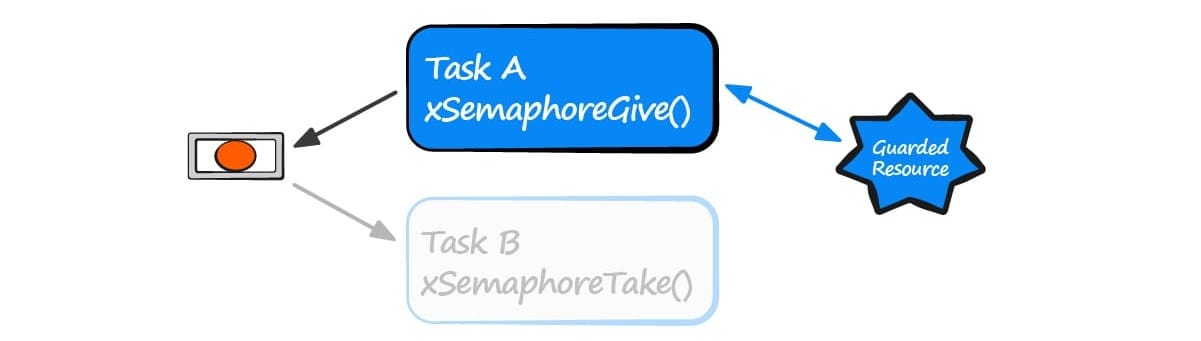

Task B opts to enter the Blocked state to wait for the mutex, allowing Task A to run again. Task A finishes with the resource, so it gives the mutex back.

Task A giving the mutex back causes Task B to exit the Blocked state. Task B can now successfully obtain the mutex, and having done so is permitted to access the resource.

When Task B finishes accessing the resource it too gives the mutex back. The mutex is now once again available to both tasks.

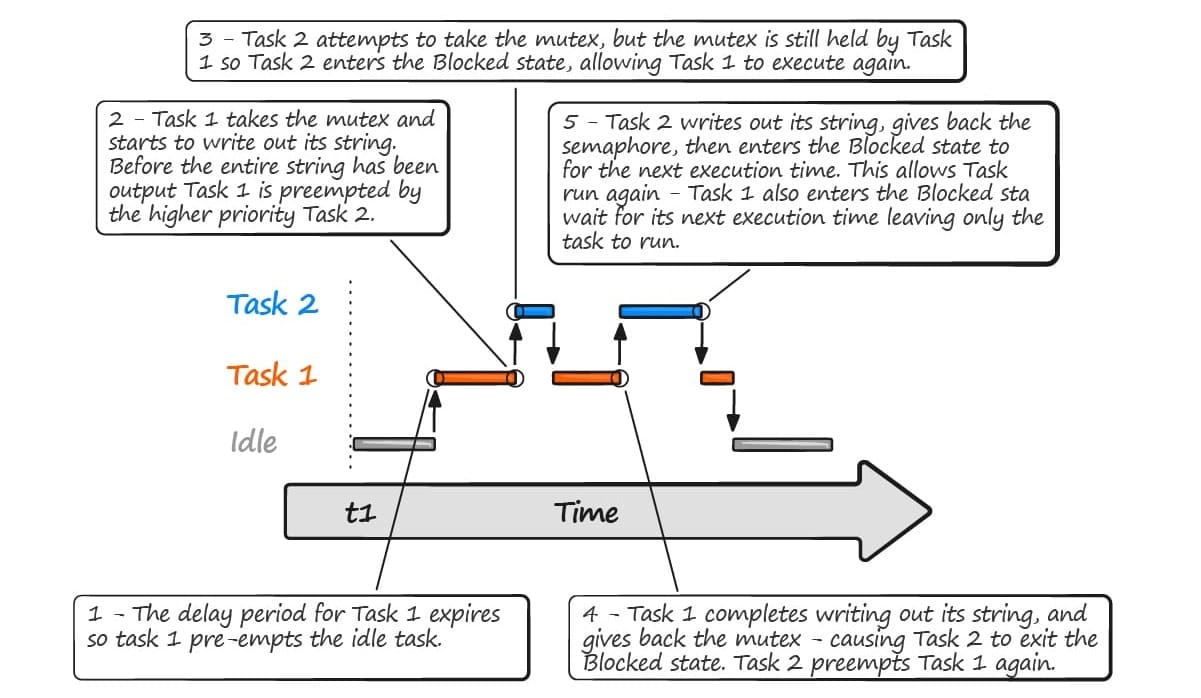
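The token rules in the walkthrough above can be captured in a few lines. This is a hypothetical single-threaded model, not the FreeRTOS API. `mutex_take()` succeeds only when no task holds the token, and `mutex_give()` is accepted only from the current holder. Where FreeRTOS would let Task B block, the model simply returns false.

```c
#include <stdbool.h>

#define NO_HOLDER (-1)

typedef struct {
    int holder;   /* id of the task holding the token, or NO_HOLDER */
} Mutex;

static bool mutex_take(Mutex *m, int task)
{
    if (m->holder != NO_HOLDER) {
        return false;      /* held by another task: do not touch the resource */
    }
    m->holder = task;      /* caller becomes the mutex holder */
    return true;
}

static bool mutex_give(Mutex *m, int task)
{
    if (m->holder != task) {
        return false;      /* only the holder may give the token back */
    }
    m->holder = NO_HOLDER;
    return true;
}
```

Replaying the walkthrough with Task A as id 1 and Task B as id 2 gives the following sequence:

1. A's take succeeds.
2. B's take fails.
3. A gives the mutex back.
4. B's take now succeeds.
5. After B gives it back, the mutex is free again.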

The mutex scenario described above can be implemented in FreeRTOS with the following code, in which two tasks share standard output:

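```c
#include <stdio.h>
#include <stdlib.h>
#include "FreeRTOS.h"
#include "task.h"
#include "semphr.h"

/* Board-specific initialisation, defined elsewhere. */
extern void vSetupHardware( void );

static void vPrintTask( void *pvParameters );
static void prvNewPrintString( const char *pcString );

SemaphoreHandle_t xMutex;

int main( void )
{
    vSetupHardware();

    /* The mutex must be created before the tasks that use it start running. */
    xMutex = xSemaphoreCreateMutex();
    configASSERT( xMutex != NULL );

    srand( 567 );

    /* Two instances of the same task at different priorities, each printing
       the string passed in through its task parameter. */
    xTaskCreate( vPrintTask, "Print1", 240, ( void * ) "Task1***\n", 1, NULL );
    xTaskCreate( vPrintTask, "Print2", 240, ( void * ) "Task2---\n", 2, NULL );

    vTaskStartScheduler();

    /* Only reached if there was not enough heap to start the scheduler. */
    for( ;; );
}

static void prvNewPrintString( const char *pcString )
{
    /* Wait indefinitely for the mutex; only the holder may write to stdout. */
    xSemaphoreTake( xMutex, portMAX_DELAY );
    {
        printf( "%s", pcString );
        fflush( stdout );
    }
    /* Give the mutex back so the other task can print. */
    xSemaphoreGive( xMutex );
}

static void vPrintTask( void *pvParameters )
{
    const char *pcStringToPrint = ( const char * ) pvParameters;

    for( ;; )
    {
        prvNewPrintString( pcStringToPrint );

        /* Block for a pseudo-random time of 0 to 511 ticks. */
        vTaskDelay( rand() & 0x1FF );
    }
}
```

Time diagram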

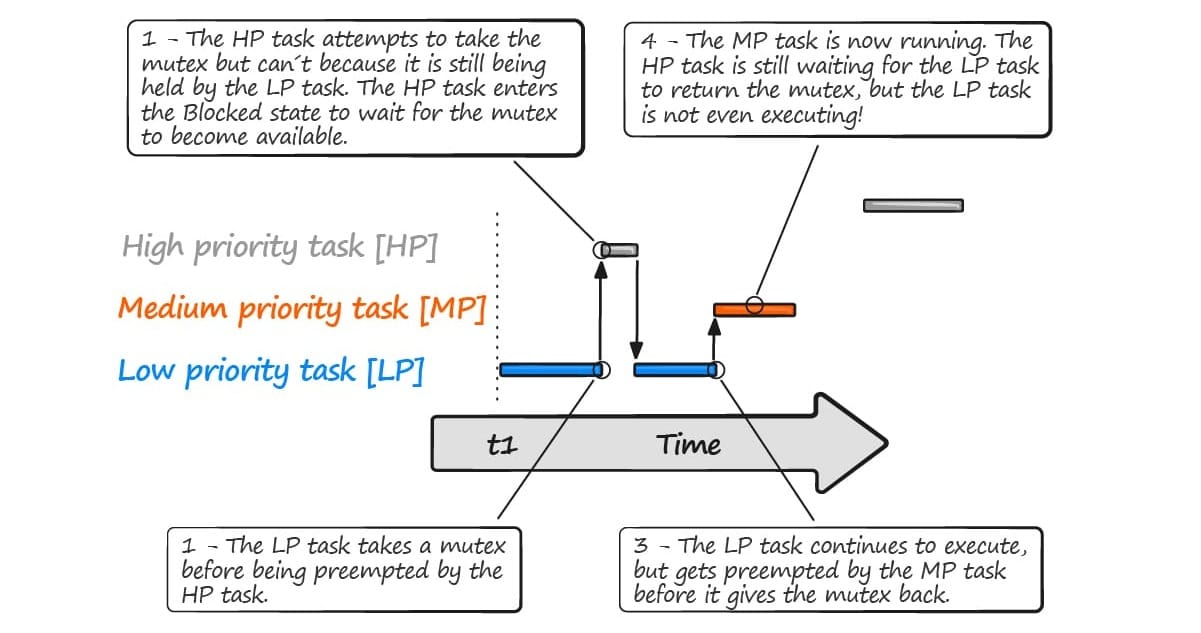

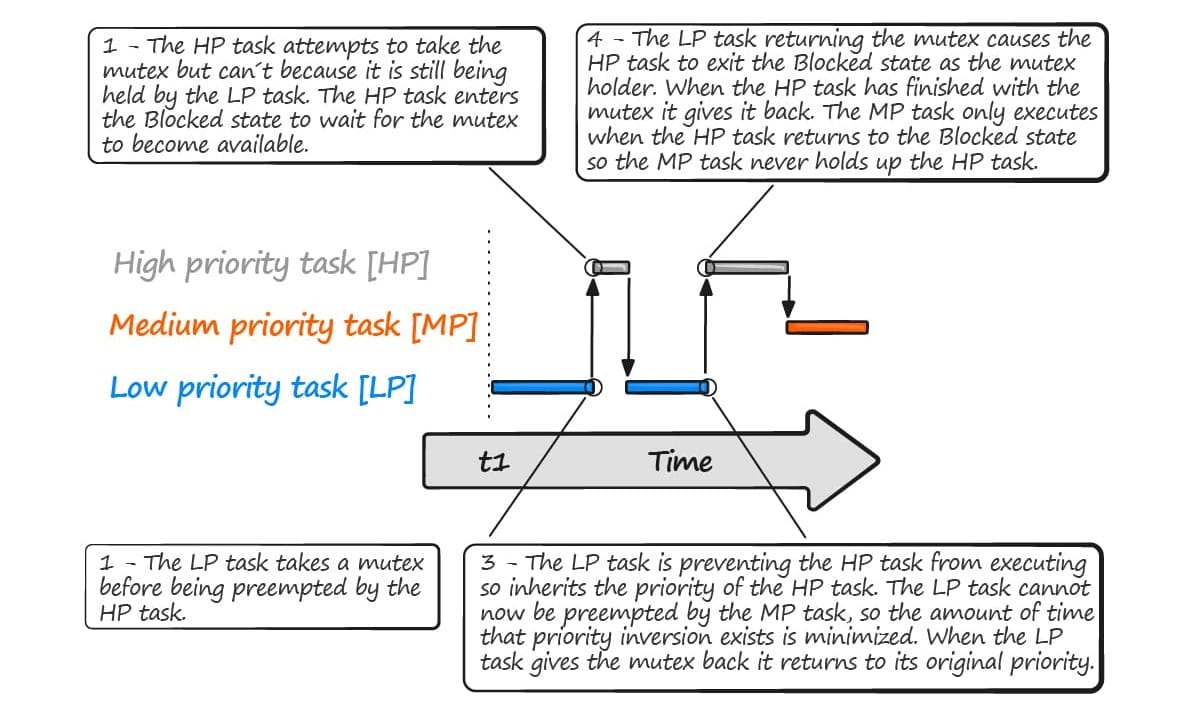

Priority Inversion

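Using a mutex to provide mutual exclusion has one potential pitfall, called priority inversion: a higher priority task is delayed by a lower priority task that holds the mutex it needs. Things get worse if a medium priority task, which does not use the mutex, preempts the low priority mutex holder, as shown in the image. The high priority task then also has to wait for the medium priority task.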

FreeRTOS mutexes include a basic priority inheritance mechanism, which minimizes the negative effects of priority inversion. It does not fix priority inversion, but it lessens its impact. While a low priority task holds a mutex, it temporarily inherits the priority of the highest priority task waiting for that mutex. Its priority returns to its original value when it gives the mutex back.

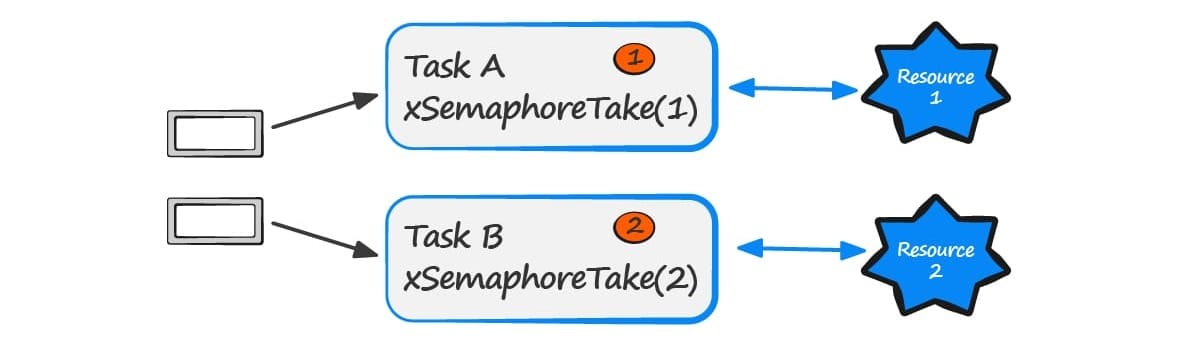

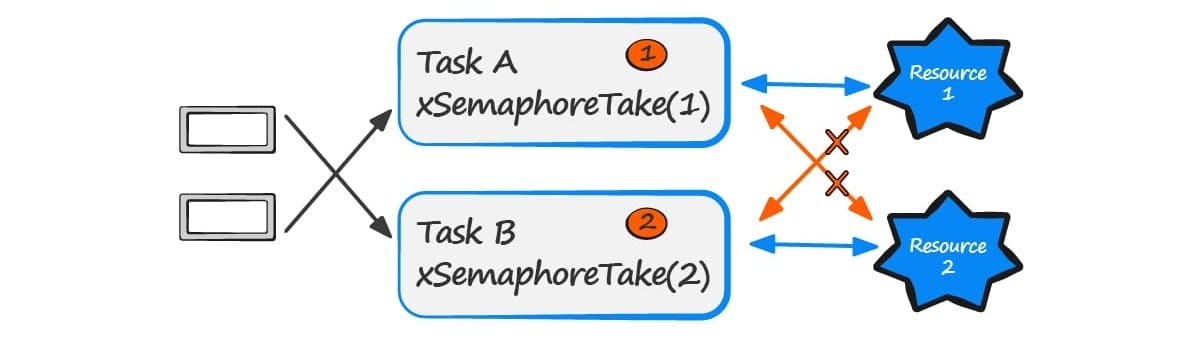
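The timelines in the images can be approximated with a tick-by-tick model. This is a hypothetical sketch, not the FreeRTOS scheduler. One CPU always runs the ready task with the highest effective priority. The task lengths and arrival ticks are arbitrary values chosen only to show the shape of the problem.

```c
#include <stdbool.h>

/* L (priority 1) holds the mutex at tick 0 and needs 3 more ticks with it.
   H (priority 3) is ready at tick 1 and needs the mutex for 1 tick.
   M (priority 2) is ready at tick 1 and runs for 4 ticks without the mutex. */
enum { L, M, H, NTASKS };
#define NO_HOLDER (-1)

typedef struct {
    int priority;      /* base priority                 */
    int ready_at;      /* tick at which the task is ready */
    int work;          /* ticks of work remaining        */
    bool needs_mutex;
} Task;

/* Returns the tick at which H completes, with or without priority inheritance. */
static int h_finish_tick(bool inheritance)
{
    Task t[NTASKS] = {
        [L] = { 1, 0, 3, true  },
        [M] = { 2, 1, 4, false },
        [H] = { 3, 1, 1, true  },
    };
    int holder = L;

    for (int now = 0; now < 100; now++) {
        bool h_waiting = now >= t[H].ready_at && t[H].work > 0 &&
                         holder != NO_HOLDER && holder != H;
        int run = -1, run_prio = -1;

        for (int i = 0; i < NTASKS; i++) {
            if (now < t[i].ready_at || t[i].work == 0) continue;                   /* not ready */
            if (t[i].needs_mutex && holder != NO_HOLDER && holder != i) continue;  /* blocked   */
            int prio = t[i].priority;
            if (inheritance && i == holder && h_waiting && t[H].priority > prio)
                prio = t[H].priority;             /* holder inherits H's priority */
            if (prio > run_prio) { run = i; run_prio = prio; }
        }
        if (run == -1) continue;                  /* idle tick */

        if (t[run].needs_mutex && holder == NO_HOLDER) holder = run;   /* take */
        if (--t[run].work == 0 && holder == run) holder = NO_HOLDER;  /* give */
        if (run == H && t[H].work == 0) return now + 1;
    }
    return -1;
}
```

Without inheritance, H finishes at tick 8, because M runs ahead of the mutex holder L. With inheritance, L runs at priority 3 until it gives the mutex back, and H finishes at tick 4.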

Deadlock

Deadlock is another potential pitfall of using mutexes for mutual exclusion. It occurs when two tasks cannot proceed because each is waiting for a resource that is held by the other.

Each task takes a different mutex, so each gains access to a different resource.

Each task then tries to take the mutex held by the other task, without first giving back the mutex it already holds, so neither task can continue.
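The two-mutex deadlock can be reproduced with a deterministic model. In this hypothetical sketch, each task takes mutexes 0 and 1 in a given order and then gives both back. The tasks alternate one take attempt at a time. A common way to avoid this deadlock is to take mutexes in the same order in every task, which is shown for comparison.

```c
#include <stdbool.h>

#define NONE (-1)

/* Returns true if two tasks, each taking mutex 0 and mutex 1 in the given
   order and then giving both back, end up blocked on each other. The tasks
   alternate one take attempt at a time, the unlucky interleaving that
   exposes the deadlock. */
static bool runs_into_deadlock(const int order_a[2], const int order_b[2])
{
    const int *order[2] = { order_a, order_b };
    int owner[2] = { NONE, NONE };   /* owner[m]: task holding mutex m */
    int taken[2] = { 0, 0 };         /* mutexes each task holds so far */

    while (taken[0] < 2 || taken[1] < 2) {
        bool progressed = false;

        for (int task = 0; task < 2; task++) {
            if (taken[task] == 2) continue;          /* already finished */
            int m = order[task][taken[task]];
            if (owner[m] != NONE) continue;          /* would block      */

            owner[m] = task;                         /* take succeeds    */
            progressed = true;
            if (++taken[task] == 2) {                /* holds both: use the resources, */
                owner[order[task][0]] = NONE;        /* then give both mutexes back    */
                owner[order[task][1]] = NONE;
            }
        }
        if (!progressed) return true;   /* every unfinished task is blocked */
    }
    return false;
}
```

With opposite orders ({0, 1} and {1, 0}), each task takes one mutex and then waits for the other forever. With the same order in both tasks, the second task blocks on the first mutex until the first task has finished, so both complete.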

Recursive Mutexes

It is also possible for a task to deadlock with itself. This will happen if a task attempts to take the same mutex more than once, without first returning the mutex. Consider the following scenario:

- A task successfully obtains a mutex.

- While holding the mutex, the task calls a library function.

- The implementation of the library function attempts to take the same mutex, and enters the Blocked state to wait for the mutex to become available.

At the end of this scenario the task is in the Blocked state to wait for the mutex to be returned, but the task is already the mutex holder. A deadlock has occurred because the task is in the Blocked state to wait for itself.

This type of deadlock can be avoided by using a recursive mutex in place of a standard mutex. A recursive mutex can be ‘taken’ more than once by the same task, and will be returned only after one call to ‘give’ the recursive mutex has been executed for every preceding call to ‘take’ the recursive mutex.
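FreeRTOS cannot run in a short standalone listing, so the sketch below swaps in POSIX threads mutexes. This is a different API, used here only as an analogue. It shows both cases described above:

- A standard mutex of the error-checking type reports the self-deadlock as `EDEADLK` instead of blocking. A FreeRTOS standard mutex would simply block.
- A recursive mutex needs one unlock per lock.

The helper `init_mutex()` is hypothetical. On some systems the code must be compiled with `-pthread`.

```c
#define _XOPEN_SOURCE 700
#include <errno.h>
#include <pthread.h>

/* Initialises a mutex of the given POSIX type (error-checking or recursive). */
static int init_mutex(pthread_mutex_t *m, int type)
{
    pthread_mutexattr_t attr;
    int rc = pthread_mutexattr_init(&attr);
    if (rc != 0) return rc;
    rc = pthread_mutexattr_settype(&attr, type);
    if (rc == 0) rc = pthread_mutex_init(m, &attr);
    pthread_mutexattr_destroy(&attr);
    return rc;
}

/* A standard (non-recursive) mutex taken twice by the same thread.
   Returns the result of the second lock attempt. */
static int second_take_standard(void)
{
    pthread_mutex_t m;
    if (init_mutex(&m, PTHREAD_MUTEX_ERRORCHECK) != 0) return -1;
    pthread_mutex_lock(&m);
    int rc = pthread_mutex_lock(&m);     /* same thread, already the holder */
    pthread_mutex_unlock(&m);
    pthread_mutex_destroy(&m);
    return rc;
}

/* A recursive mutex: two takes need two gives. Returns the result of a
   third give, made after the thread no longer holds the mutex. */
static int third_give_recursive(void)
{
    pthread_mutex_t m;
    if (init_mutex(&m, PTHREAD_MUTEX_RECURSIVE) != 0) return -1;
    pthread_mutex_lock(&m);              /* count 1                  */
    pthread_mutex_lock(&m);              /* count 2, no deadlock     */
    pthread_mutex_unlock(&m);            /* count 1, still held      */
    pthread_mutex_unlock(&m);            /* count 0, released        */
    int rc = pthread_mutex_unlock(&m);   /* nothing left to give     */
    pthread_mutex_destroy(&m);
    return rc;
}
```

`second_take_standard()` returns `EDEADLK`. `third_give_recursive()` returns `EPERM`, because after two gives the calling thread is no longer the holder.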

Standard mutexes and recursive mutexes are created and used in a similar way:

- Standard mutexes are created using xSemaphoreCreateMutex(). Recursive mutexes are created using xSemaphoreCreateRecursiveMutex(). The two API functions have the same prototype.

- Standard mutexes are ‘taken’ using xSemaphoreTake(). Recursive mutexes are ‘taken’ using xSemaphoreTakeRecursive(). The two API functions have the same prototype.

- Standard mutexes are ‘given’ using xSemaphoreGive(). Recursive mutexes are ‘given’ using xSemaphoreGiveRecursive(). The two API functions have the same prototype.

Code Example

/* Recursive mutexes are variables of type SemaphoreHandle_t. */

SemaphoreHandle_t xRecursiveMutex;

/* The implementation of a task that creates and uses a recursive mutex. */

void vTaskFunction( void *pvParameters )

{

const TickType_t xMaxBlock20ms = pdMS_TO_TICKS( 20 );

/* Before a recursive mutex is used it must be explicitly created. */

xRecursiveMutex = xSemaphoreCreateRecursiveMutex();

/* Check the semaphore was created successfully. */

configASSERT( xRecursiveMutex );

for( ;; )

{

/* Take the recursive mutex. */

if( xSemaphoreTakeRecursive( xRecursiveMutex, xMaxBlock20ms ) == pdPASS )

{

/* The recursive mutex was successfully obtained. The task can now access

the resource the mutex is protecting. At this point the recursive call

count (which is the number of nested calls to xSemaphoreTakeRecursive())

is 1, as the recursive mutex has only been taken once. */

/* While it already holds the recursive mutex, the task takes the mutex

again. In a real application, this is only likely to occur inside a sub-

function called by this task, as there is no practical reason to knowingly

take the same mutex more than once. The calling task is already the mutex

holder, so the second call to xSemaphoreTakeRecursive() does nothing more

than increment the recursive call count to 2. */

xSemaphoreTakeRecursive( xRecursiveMutex, xMaxBlock20ms );

/* ... */

/* The task returns the mutex after it has finished accessing the resource

the mutex is protecting. At this point the recursive call count is 2, so

the first call to xSemaphoreGiveRecursive() does not return the mutex.

Instead, it simply decrements the recursive call count back to 1. */

xSemaphoreGiveRecursive( xRecursiveMutex );

/* The next call to xSemaphoreGiveRecursive() decrements the recursive call

count to 0, so this time the recursive mutex is returned.*/

xSemaphoreGiveRecursive( xRecursiveMutex );

/* Now one call to xSemaphoreGiveRecursive() has been executed for every

proceeding call to xSemaphoreTakeRecursive(), so the task is no longer the

mutex holder.

}

}

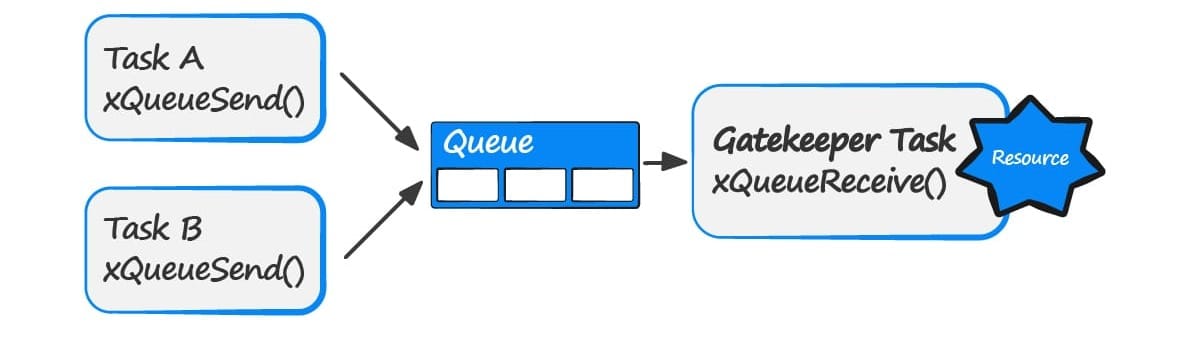

}Gatekeeper Task

Gatekeeper tasks provide a clean method of implementing mutual exclusion without the risk of priority inversion or deadlock. A gatekeeper task is a task that has sole ownership of a resource. Only the gatekeeper task is allowed to access the resource directly.

Two tasks access a resource indirectly: both send information to a gatekeeper task, which is in control of the resource.

Gatekeeper code example:
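The gatekeeper pattern can be sketched without an RTOS. In this hypothetical model:

- A fixed-length queue of string pointers (`MsgQueue`, with an assumed length of 5) stands in for a FreeRTOS queue.
- `queue_send()` never blocks, like a send with a block time of 0.
- `gatekeeper_drain()` is the only function that writes to the output.

Only pointers are queued, so the strings must stay valid until the gatekeeper has used them. String literals satisfy that.

```c
#include <stdbool.h>
#include <string.h>

#define QUEUE_LENGTH 5

/* Hypothetical fixed-length queue of string pointers. */
typedef struct {
    const char *items[QUEUE_LENGTH];
    size_t head;
    size_t count;
} MsgQueue;

/* Non-blocking send: fails when the queue is full. */
static bool queue_send(MsgQueue *q, const char *msg)
{
    if (q->count == QUEUE_LENGTH) return false;
    q->items[(q->head + q->count) % QUEUE_LENGTH] = msg;
    q->count++;
    return true;
}

static bool queue_receive(MsgQueue *q, const char **msg)
{
    if (q->count == 0) return false;
    *msg = q->items[q->head];
    q->head = (q->head + 1) % QUEUE_LENGTH;
    q->count--;
    return true;
}

/* The gatekeeper: the only code that writes to 'out'. Each message is
   appended whole, so messages from different senders never interleave. */
static void gatekeeper_drain(MsgQueue *q, char *out, size_t out_size)
{
    const char *msg;
    while (queue_receive(q, &msg)) {
        if (strlen(out) + strlen(msg) < out_size) {
            strcat(out, msg);
        }
    }
}
```

If two senders each post one message and the gatekeeper then drains the queue, the output holds both messages intact and in order. Once five messages are waiting, a further non-blocking send fails, and that message is lost unless the sender retries or blocks.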

```c
#include <stdio.h>
#include <stdlib.h>
#include "FreeRTOS.h"
#include "task.h"
#include "queue.h"

/* Board-specific initialisation, defined elsewhere. */
extern void vSetupHardware( void );

static void vPrintTask( void *parameters );
static void vPrinter( void *parameters );

/* Queue of pointers to strings; only the gatekeeper task reads from it. */
QueueHandle_t gatequeue;

int main( void )
{
    vSetupHardware();

    /* Each queue item is a pointer to a string, not a copy of the string. */
    gatequeue = xQueueCreate( 5, sizeof( char * ) );
    configASSERT( gatequeue != NULL );

    srand( 567 );

    /* Create two instances of the tasks that send messages to the gatekeeper. The
       tasks are created at different priorities so the higher priority task will
       occasionally preempt the lower priority task. */
    xTaskCreate( vPrintTask, "print1", 240, ( void * ) "Task1*****************\n", 1, NULL );
    xTaskCreate( vPrintTask, "print2", 240, ( void * ) "Task2-----------------\n", 2, NULL );

    /* Create the gatekeeper task. This is the only task that is permitted
       to directly access standard out. */
    xTaskCreate( vPrinter, "gatekeeper", 240, NULL, 0, NULL );

    vTaskStartScheduler();

    /* Only reached if there was not enough heap to start the scheduler. */
    for( ;; );
}

static void vPrintTask( void *parameters )
{
    char *taskMessage = ( char * ) parameters;

    for( ;; )
    {
        /* Print out the string, not directly, but instead by passing a pointer to
           the string to the gatekeeper task via a queue. A block time of 0 is used
           because there should normally be space in the queue; if the queue is
           full, this message is dropped. */
        xQueueSend( gatequeue, &taskMessage, 0 );

        /* Block for a pseudo-random time of 0 to 7 ticks. */
        vTaskDelay( rand() & 0x7 );
    }
}

static void vPrinter( void *parameters )
{
    /* This is the only task that is allowed to write to standard out. Any other
       task wanting to write a string to the output does not access standard out
       directly, but instead sends the string to this task. As only this task
       accesses standard out, there are no mutual exclusion or serialization
       issues to consider within the implementation of the task itself. */
    char *printMessage;

    ( void ) parameters; /* Not used by this task. */

    for( ;; )
    {
        /* Wait for a message to arrive. */
        xQueueReceive( gatequeue, &printMessage, portMAX_DELAY );

        /* Output the received string, then loop back to wait for the next one. */
        printf( "%s", printMessage );
    }
}
```

Code Snippets

- MUTEX: DeadLock

- MUTEX: DeadLock with timeout for debug

- MUTEX: Priority inversion

- MUTEX: Code protection using Mutex

- MUTEX: Recursive Mutex

- MUTEX: Interrupts and Mutex

Exercises (printf)

- Create a program that uses a mutex. Two tasks must be created: the first task with a period of 600 ms and the second task with a period of 200 ms. The first task takes the mutex and gives it back only when it finishes its work; the second task tries to take the mutex. Print messages on the terminal to identify each task. Task_1 priority: 2, Task_2 priority: 1.

- Develop a program that protects a section of code with a mutex. Create a function that contains the protected code and displays a message on the screen, and create two tasks that take and give the mutex around calls to that function. The message must be different for each task. Task_1 period: 100 ms, Task_2 period: 50 ms.

- Modify the last example so that the function sends the message through a queue to another task. The new task “ReadMsg” runs every 1 second and reads all the messages in the queue. The tasks from the last example must not send new data to the queue while ReadMsg is reading the buffer, and must resume sending when ReadMsg finishes. Use another mutex to prevent Task_1 and Task_2 from sending new data during the read. Task_1 period: 600 ms, priority: 2; Task_2 period: 200 ms, priority: 2; ReadMsg priority: 1.

Exercises

- Develop a program using a recursive mutex. Create two tasks: the first task will turn on 4 out of 8 LEDs (e.g., LED0, LED1, LED2, LED3) by calling a higher-level function that internally calls another function to control the LED range, both requiring the recursive mutex. The second task will turn off all LEDs after acquiring the same mutex. Once the second task finishes turning off the LEDs, the first task will proceed to turn on the next 4 LEDs (e.g., LED4, LED5, LED6, LED7). The process will continue in a loop, demonstrating the use of the recursive mutex to handle nested function calls within the same task.

- Modify the previous program to add two buttons that select which part of the LED series is controlled. The first button controls the first part of the series, and the second button controls the other part.

- Modify example 2 to use a counting semaphore for events instead of the recursive mutex. Use a mutex to prevent race conditions between tasks.